how to query and store meta data of your semantic model with sempy and dax info functions.

introduction.

The new native DAX DMV functions are quite interesting, since they simplify the querying of a semantic model’s meta data. Thus, they can tremendously enhance our capabilities for documenting and monitoring. For the ones that have worked with DMVs in Analysis Services or Power BI before, these new INFO DAX functions expose the TMSCHEMA DMVs. Here a way on how you can call them in the DAX query view:

Unfortunately, at time of writing this post, you could not use those functions i.e. in calculated tables or columns in order to process and use the data further. In this demo, one way being suggested is to copy and paste the data from the DAX query view to wherever you need them. Another way could be to connect to the Power BI semantic model and run the INFO DAX functions against it. We have used a very similar approach when querying the DMVs straight from Power Query. You might wanna follow that article but instead of using the DMV Query syntax, you could just shoot those DAX INFO functions. Here a screenshot on an example, but more information can be found in the article:

In this article here, I’ll show you yet another way. We will be utilising Sempy in a Fabric notebook from where we run the INFO DAX queries. Then we push the meta data into a Lakehouse and finally build a semantic model on top of it. I also do not dislike the idea of having the meta data separate from the actual semantic model. Of course, you could even combine the meta data of several semantic models pushing your governance to the next level.

Once again, the approach here is indeed a viable alternative to querying the DMVs directly via Power Query.

prerequisites.

1. A Fabric capacity and workspace

2. A Fabric Lakehouse, the one in this article is called AdventureWorks_DMV_Lakehouse

plan of action.

1. What’s the goal?

The goal is to load the meta data of your semantic model into a Lakehouse via a Fabric Notebook. After, we create a semantic model on top of it using direct lake:

Next, we specify the name of our semanticmodel as well as the DMVs we’d like to query and save in the Lakehouse. Obviously, it is a stretch to load in all of them, as we did below. Feel free to just use the ones you actually need. Note, the DMV DATACOVERAGEDEFINITIONS is commented out (Line 17) since this one threw an error (both via the Python code and in Power BI Desktop):

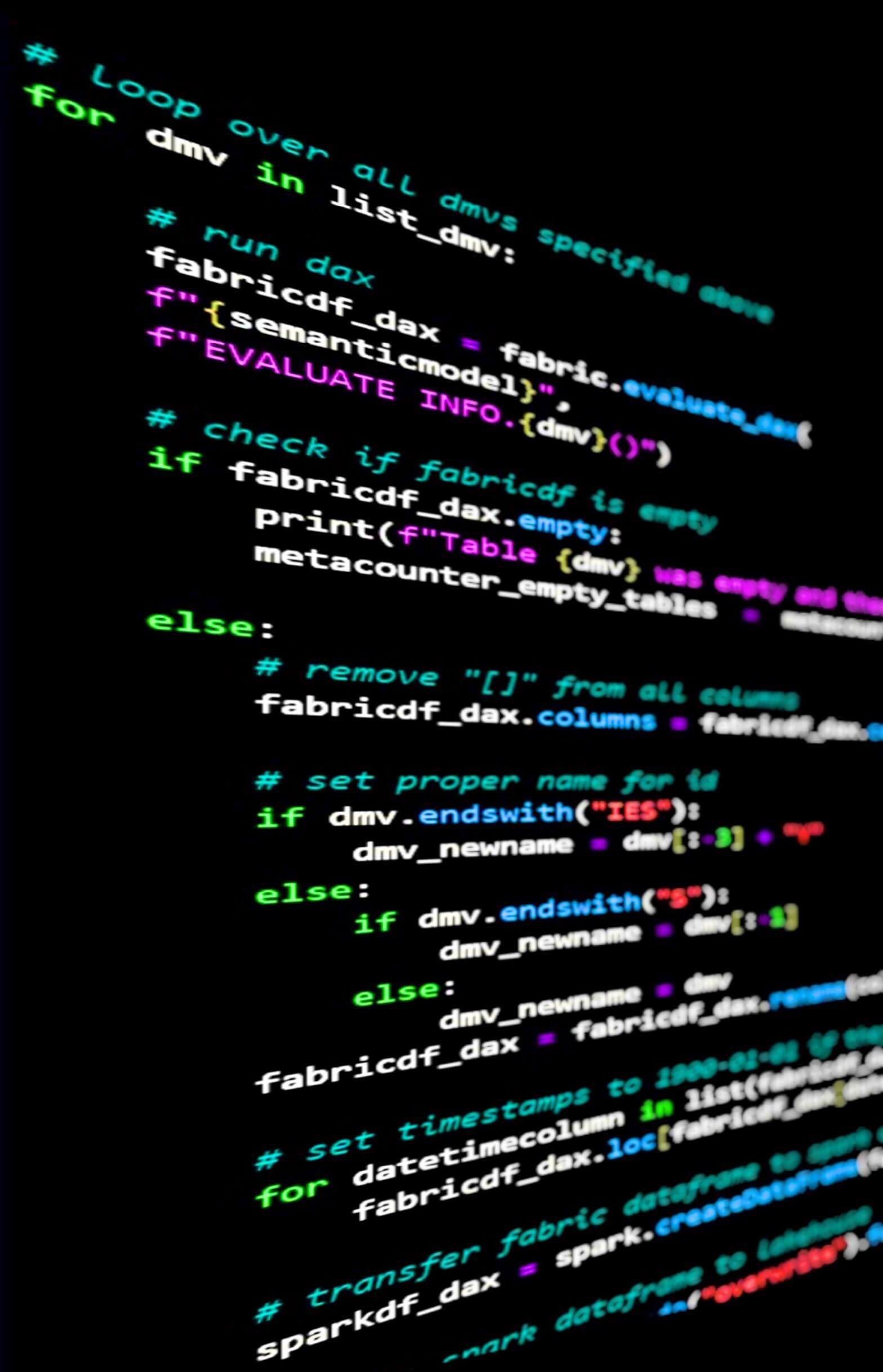

Now, this snippet does the magic. It loops over the DMV list defined above, does some cleansing and finally loads the data into the attached Lakehouse:

3. Showtime

This is how it shall look like, when running the script. On the left, we can see the tables that have been created and loaded by the Notebook. In addition, the script logs which tables got loaded, too. Note, empty tables are ignored.

After running the script, you can refresh the Tables folder in your Lakehouse:

Browsing to the SQL endpoint, this is how your meta semantic model could look like:

end.

As seen in the appendix, I also created a semantic model in Power BI connecting to the data via the Warehouse SQL endpoint. I did this so I can share a file with you, if you are interested in taking a glimpse at the meta data of the DMVs. In that case, just comment below and I’ll send you the pbix file via an e-mail. Note, about half of the tables were empty though.

Further, I’d like to refer to the guys from dax.guide providing documentation about those functions (and many more). Also, I have not created a report on top of the data, yet, but for inspiration purposes I wanna refer to the Power BI report that I created some time ago on the basis of DMVs directly queried from Power Query. Of course, you could also run the old-school DMVs directly in a Fabric Notebook, as Phil Seamark has shown. Probably, the biggest goodie of doing it with the new DAX Info functions instead: You can join them straight in the DAX statement which provides you with a direct way of denormalizing them:

appendix.

Below, the whole model containing all the DMV tables that returned a result for my semantic model. Once again, loading all those tables is probably overkill, especially since there is quite some normalization going on. Loading them into Power BI “as they are” is definitely not a best practice. Normally, you would want to do some kind of modelling. In fact, you could actually directly do this transformation in the Notebook as you can join the DMVs in the DAX query itself (see screenshot above). This is is a really cool one in my opinion!

For some initial exploration of the meta data, I guess it’s alright to load the model as shown below. For a more sustainable solution, however, I do recommend to only consider the tables you have a need for. Also, consider some denormalization if appropriate.

Once again, I do have a local copy of the semantic model shown below. If you’d like to get hold of it, leave a comment or shoot me a contact text. I ‘ll then send you the pbix in an e-mail. With the file, you can dig into the data yourself to see what those DMVs bring to the table. Spoiler alert: There is loads!