how to pre-warm your power bi semantic model from fabric data pipelines.

introduction.

For me personally, Direct Lake was the one of the biggest wow! with the announcement of Fabric and I am sure there are many out there thinking the same. I do not wanna go too deep into how the new storage mode works, but it is worth noticing that Power BI still needs to page data into memory when using Direct Lake. Chris Webb has written an awesome article where he explains the different states (or “level of hotness” as he calls it) of a semantic model when a DAX query is run. The more artefacts (column segments, dictionaries, index joins) already are paged into memory to return the result for a specific query, the warmer we’d consider the state. In turn, the higher that temperature, the better is the possible query performance. Data-Marc published also a great post about this topic, absolutely worth a read!

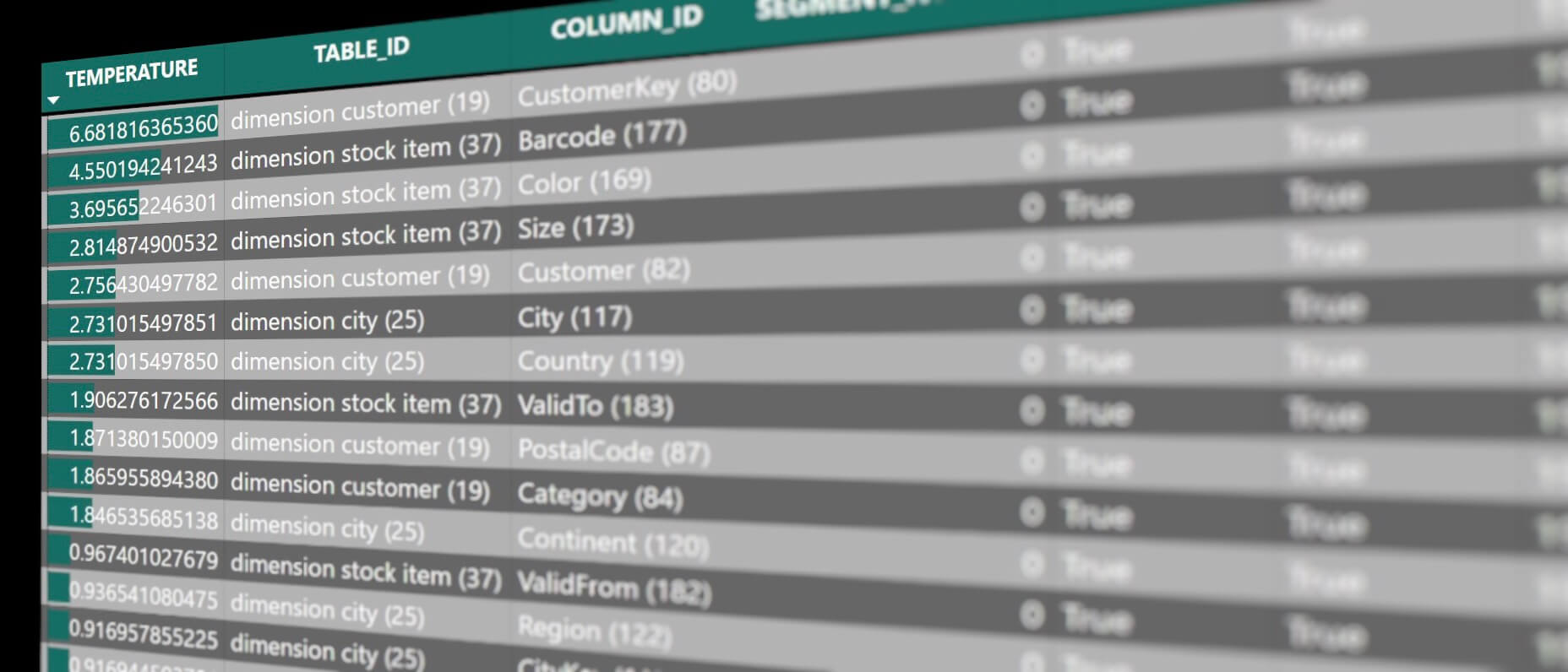

In fact, we can check out the temperature of a column with a couple of DMVs, i.e. the DISCOVER_STORAGE_TABLE_COLUMN_SEGMENTS:

The model displayed in the picture above has not been queried, which you can see in the empty temperature column. This state we’d consider cold. After playing around a bit in a Power BI report referencing this semantic model, the picture looks slightly different:

The column segment for the column City in the dimension city table, now has a value in the temperature attribute.

I myself have only seen some minor differences on performance when querying cold vs warm states of my model, but I have hardly had any data to play around with. However, there are already some fantastic articles out there that do an analysis on querying cold vs warm states and even explaining in more detail the concepts behind it.

What I want to show you in this blog article here, is a way to automatically warm up the cache as part of a data pipeline. One use case: Say you have a larger semantic model that is used across the globe and you would like users in the US to have their data in memory right at the start of their working day. All you would need to do is to create a pipeline as shown in this article here and schedule to run it before people get into office.

The idea of pre-warming the cache is nothing revolutionary and has already been shown by fabric.guru (via a Python script using the ExecuteQueries API) as well as Guy in a Cube (via semantic link). Still, I thought, I’d show you another way on how to do this in fabric data pipelines as this might be the tool you are using for orchestrating your workloads.

Also as a disclaimer, I am not sure whether this is the best way of doing such pre-warming. But I suppose it is a way.

In addition, my guts say, cache warming could be counterproductive, if done poorly or too frequently. So I recommend to do proper testing and to not implement this one proactively, but more if you actually experience performance issues.

prerequisites.

1. A Fabric capacity and workspace

2. A Data pipeline

3. A semantic model in Fabric

4. A data connection to the Power BI REST API endpoint. Check out this blog post on how to do this.

plan of action.

1. What’s the goal?

As already seen in the introduction, we’d like to increase a specific column’s temperature as part of a data pipeline, so the data gets into memory and query latency decreases.

2. Build the pipeline

Once again, you need to have a Power BI REST API connection in place. A walkthrough on how to set this up can be found here. Note, at the time of writing this blog post, I found the executeQueries API we are calling here does not work with a service principal, but only via the OAuth2.0 authentication method. In fact, a service principal did work on an import mode model, but if the model was in Direct Lake mode we had to use OAuth2.0. I suppose under the hood there is some Fabric stuff going on that does not (yet?) work with a service principal. I elaborated a bit on this issue in the article on how to use the new Fabric REST APIs, too.

We get started by grabbing a web activity in our data pipeline and selecting the previously created web V2 connection from the dropdown. Then, we fill in the rest as per the picture below:

Relative URL: groups/[WorkspaceID]/datasets/[SemanticModelID]/executeQueries (you can find the WorkspaceID and SemanticModelID in the URL bar of your browser by clicking on the semantic model in Fabric, similar to here)

Method: POST

Body:{“queries”:[{“query”:”EVALUATE VALUES(‘dimension_city'[city])”}]}

Headers: content-type as Name and application/json as Value

Note, for the DAX query in the body, we chose a column reference in order to load the data into memory. Important to keep in mind is that the data pipeline web activity can only return data for up to 4MB in the output. If the response exceeds 4MB, an error is thrown. It seems, however, that even if that error pops up, the query itself is successfully sent to the engine. As a result, the pre-warming still works. I guess, you wanna use a DAX statement that successfully pages in the correct and complete data, but returns a small amount as a response. Perhaps a COUNTA could work for this matter.

3. Showtime

Before we execute the pipeline, let’s quickly check the temperature of our column segments:

Alright, the temperature column is empty. Let’s run the pipeline:

The subsequent check on the temperature reveals that our warming procedure has worked.

Actually, we could check this DMV straight from the data pipeline as well, as we have shown in this article before. It might be a weird one to query a DMV from a data pipeline, but one could actually utilise this to check against a self-set temperature threshold for certain columns and in case it is below, you kickstart the pre-warming procedure from above:

Relative URL: groups/[WorkspaceID]/datasets/[SemanticModelID]/executeQueries (you can find the WorkspaceID and SemanticModelID in the URL bar of your browser by clicking on the semantic model in Fabric, similar to here)

Method: POST

Body:{“queries”:[{“query”:”SELECT TABLE_ID, COLUMN_ID, SEGMENT_NUMBER, ISPAGEABLE, ISRESIDENT, TEMPERATURE, LAST_ACCESSED FROM $System.DISCOVER_STORAGE_TABLE_COLUMN_SEGMENTS”}]}

Headers: content-type as Name and application/json as Value

Here the output:

end.

So, this is one possible way of warming up the cache in a targeted and automated manner. Lastly, I wanna refer to the documentation of the executeQueries API, since there are a few limitations that are worth a note.