how to query and copy data from power bi semantic models (former datasets) via azure data factory.

introduction.

Recently, I learned about the possibility to query Power BI semantic models from other tools than Power BI itself. You can query all sorts of data – obviously all tables in the model but also a data model’s meta data. We utilised this when creating a meta report in Power BI. You can also hit these queries from an Azure Data Factory to retrieve and work with the data (actually you can do this from any client even from data visualisation tools like Tableau). This also implies, you can copy the data to somewhere else, i.e. into a database or a file storage. From an enterprise data warehouse perspective, I normally see Power BI as the last puzzle piece – and with Power BI, I mean both the tabular / semantic model as well as the report layer. More specifically, we load the data into a Modern Data Warehouse or Lakehouse from which Power BI pulls the data for end consumption. Why would we then want to query and move the data from Power BI to another storage? I have not come up with too many scenarios, but one obvious one could be to simply share data from a well modelled Power BI semantic model. Another one is to retrieve a semantic model’s meta data in order to combine it with other meta data from other parts of your data platform. You could then build a customized and holistic data governance report covering your data architecture as a whole (including data lineage, data objects etc.). Lastly, you might want to fetch data that has solely been written into the Power BI semantic model: For instance, when data is brought into Power BI from third party sources like web API calls, power automate or custom visuals. By utilizing this “write back” alternative regularly, you are able to save the historic data. In the following, there is a walk-through on how to query a published Power BI semantic model as well as copying the data into an Azure SQL database.

prerequisites.

1. A Power BI premium capacity or a PPU license. XMLA endpoints can only be used on premium workspaces

2. A semantic model published to a premium workspace

3. An Azure Data Factory

4. An Azure SQL Database

plan of action.

1. What is our starting point?

2. Query data from Power BI with Web Activity

3. Copy data from Power BI semantic model with Copy Activity

1. What is our starting point?

Let’s quickly recap the prerequisites.

A premium workspace in Power BI with a semantic model:

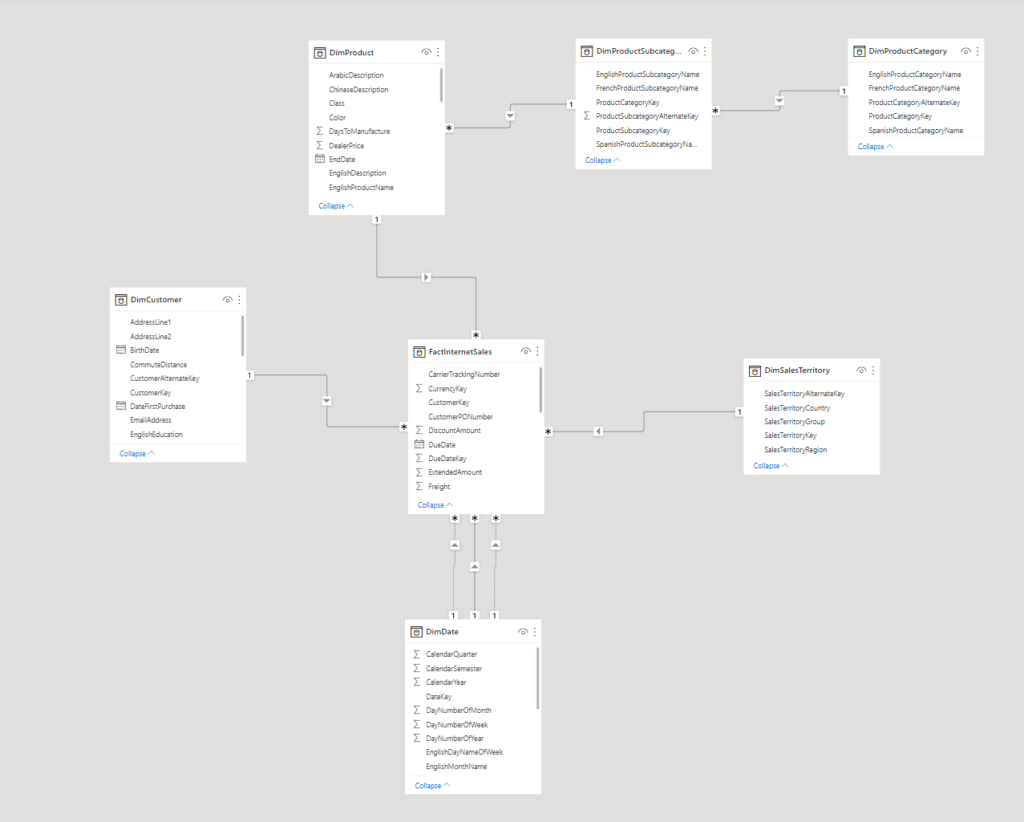

Our model looks like this:

We make use of the large AdventureWorks sample in an Azure SQL Database. If you would like to find out how to add the real AdventureWorks sample (and not the lightweight version) to an Azure SQL database, make sure to check out this blog post.

2. Query data from Power BI with Web Activity

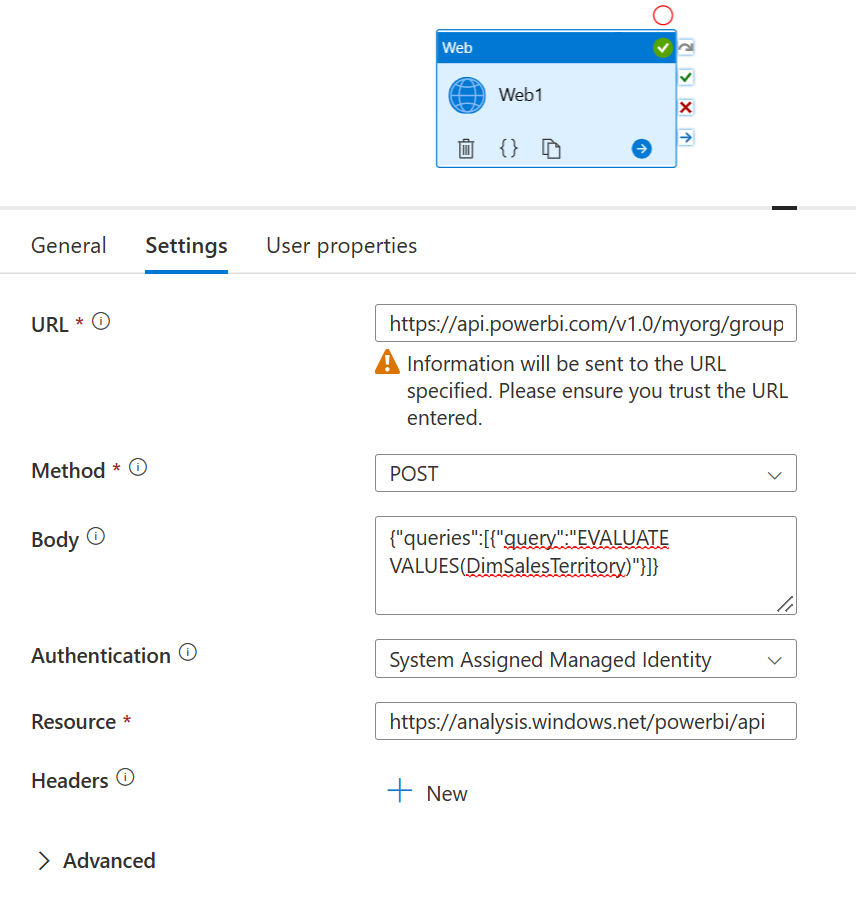

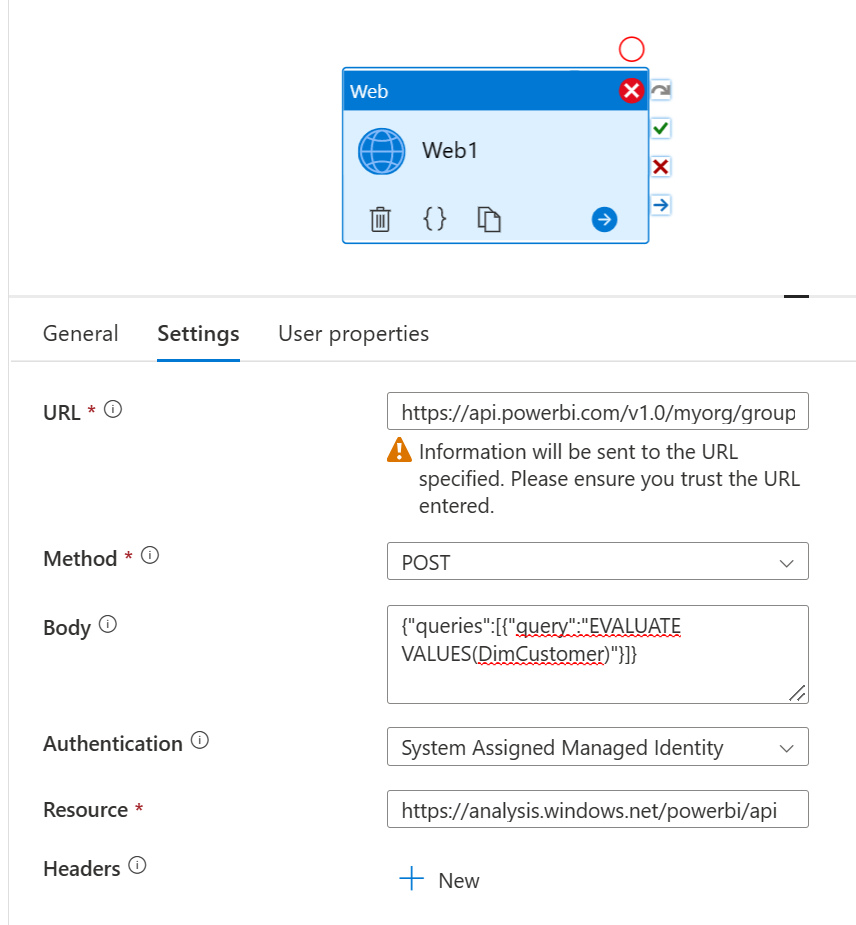

We start off by querying the DimSalesTerritory table with a normal Web Activity via the executeQueries command. Below the Settings:

The URL follows this pattern:

https://api.powerbi.com/v1.0/myorg/groups/[workspaceID]/datasets/[datasetID]/executeQueries

Both the workspaceID and the datasetID can be copied from the address / URL bar by clicking onto the semantic model in Power BI Service. See here for more information.

The Method is Post

Paste the following into the Body

{"queries":[{"query":"EVALUATE VALUES(DimSalesTerritory)"}]}

Pick System Assigned Managed Identity (Legacy: Managed Identity) as Authentication

Lastly, set Resource to

https://analysis.windows.net/powerbi/api

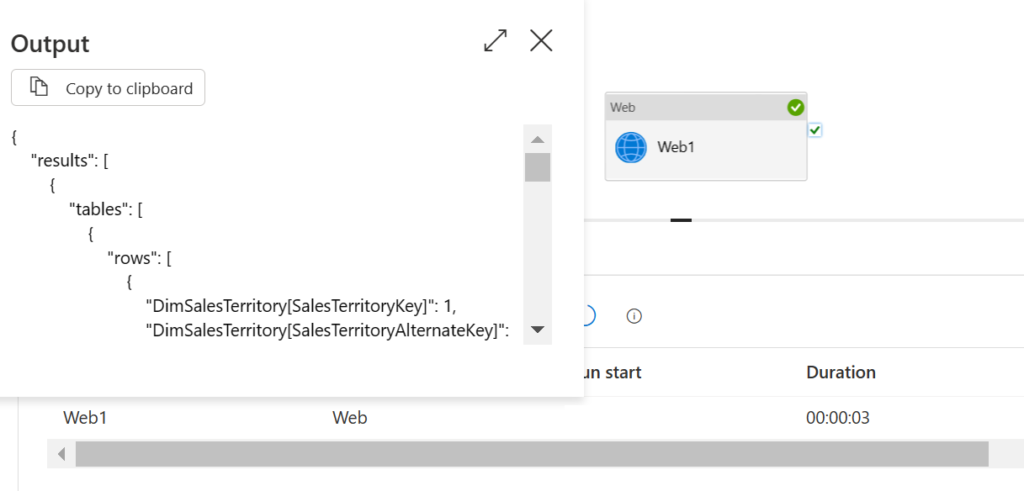

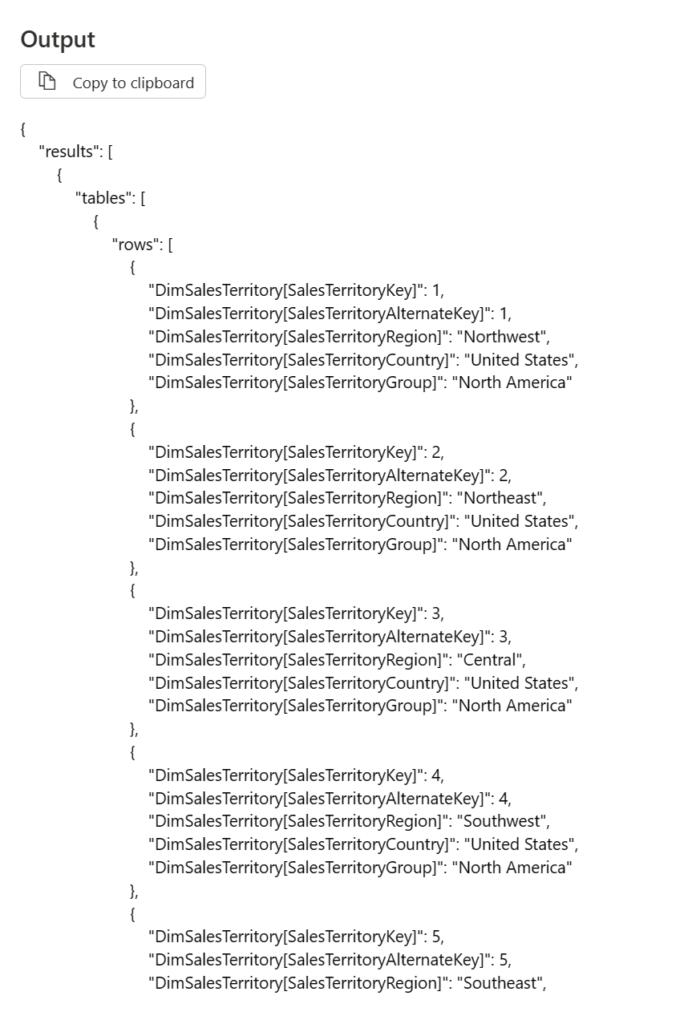

The results after we run it:

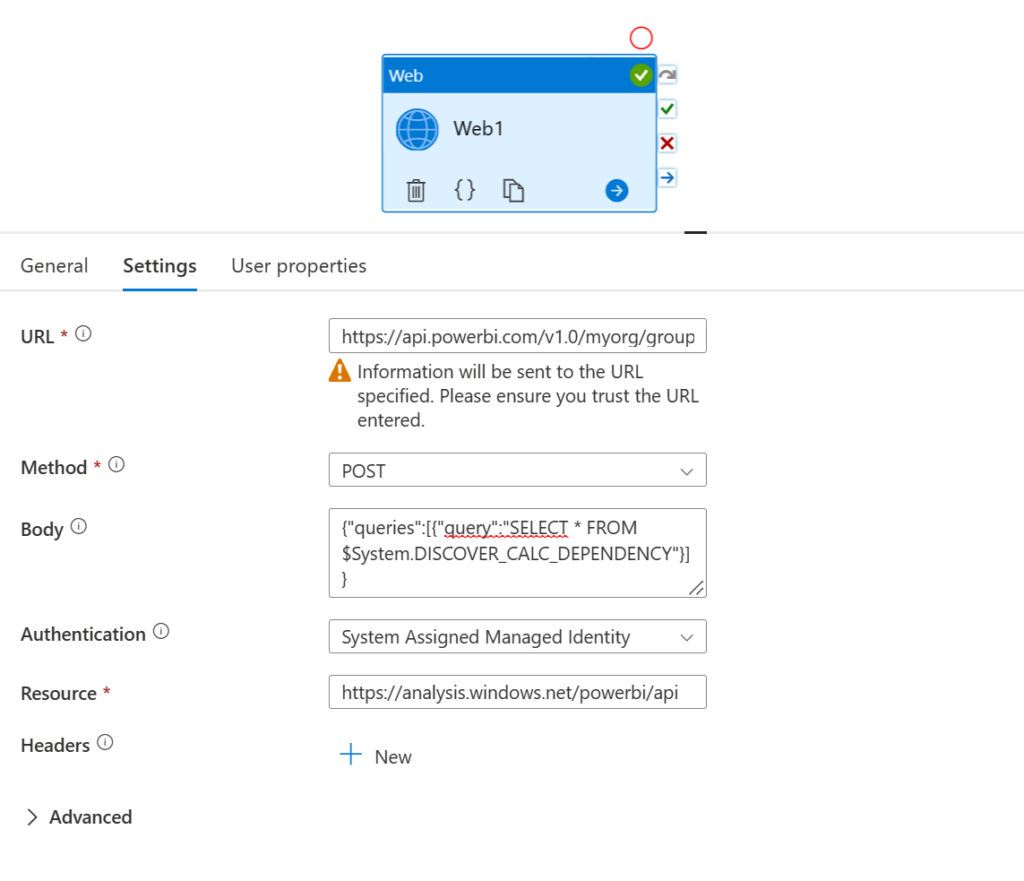

You can also query the data straight from the underlaying meta views. And let me tell you, there are plenty of these so called Dynamic Management Views (DMVs). Check out Microsoft’s documentation for more.

The URL follows this pattern:

https://api.powerbi.com/v1.0/myorg/groups/[workspaceID]/datasets/[datasetID]/executeQueries

Both the workspaceID and the datasetID can be copied from the address / URL bar by clicking onto the semantic model in Power BI Service. See here for more information.

The Method is Post

Paste the following into the Body

{"queries":[{"query":"SELECT * FROM $System.DISCOVER_CALC_DEPENDENCY"}]}

Pick System Assigned Managed Identity (Legacy: Managed Identity) as Authentication

Lastly, set Resource to

https://analysis.windows.net/powerbi/api

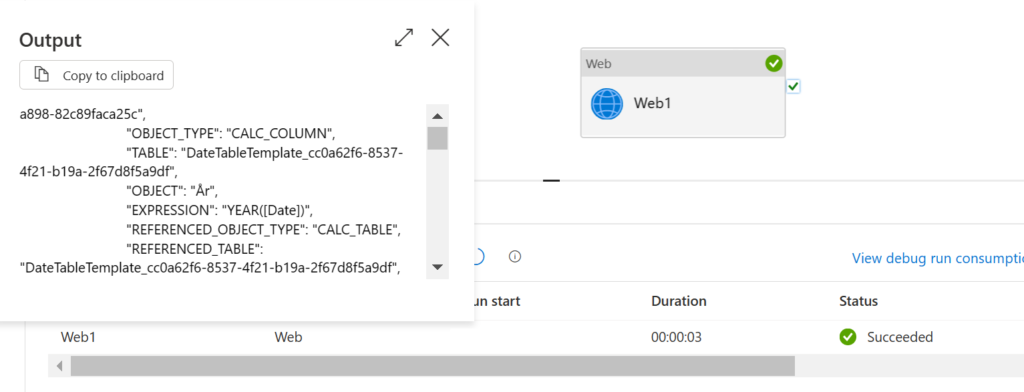

Let’s run the pipeline.

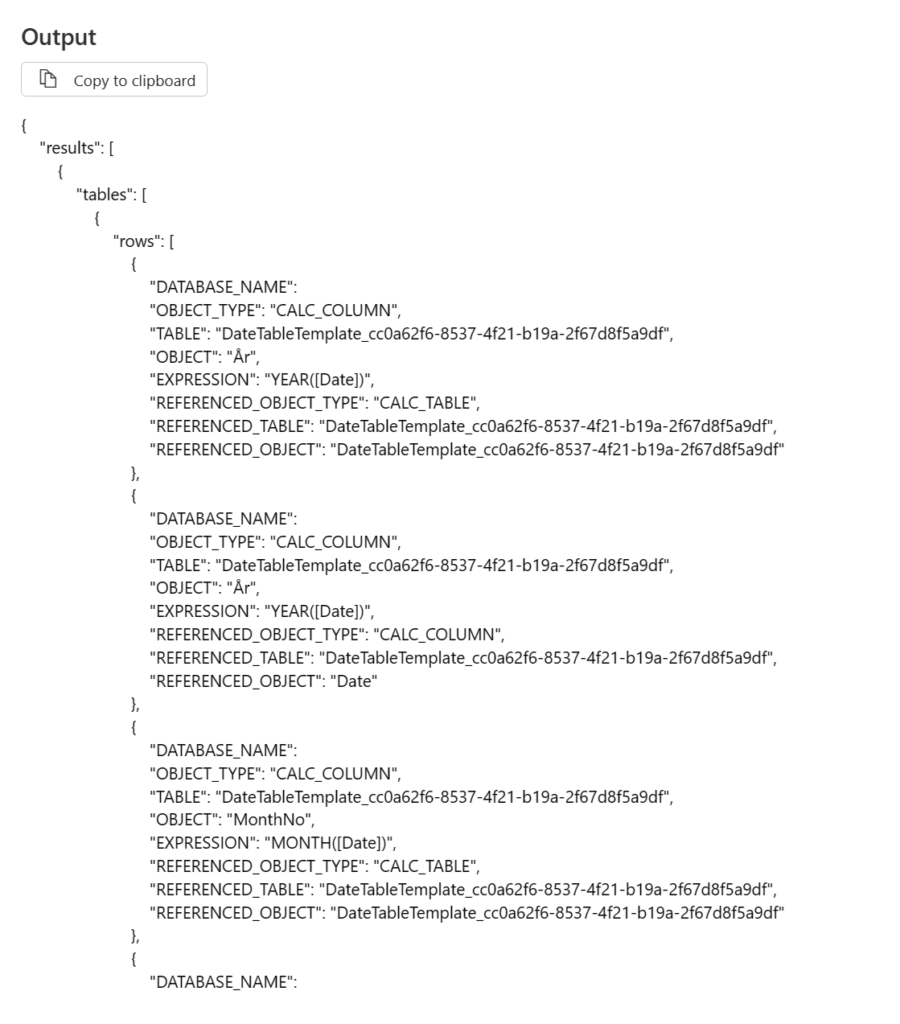

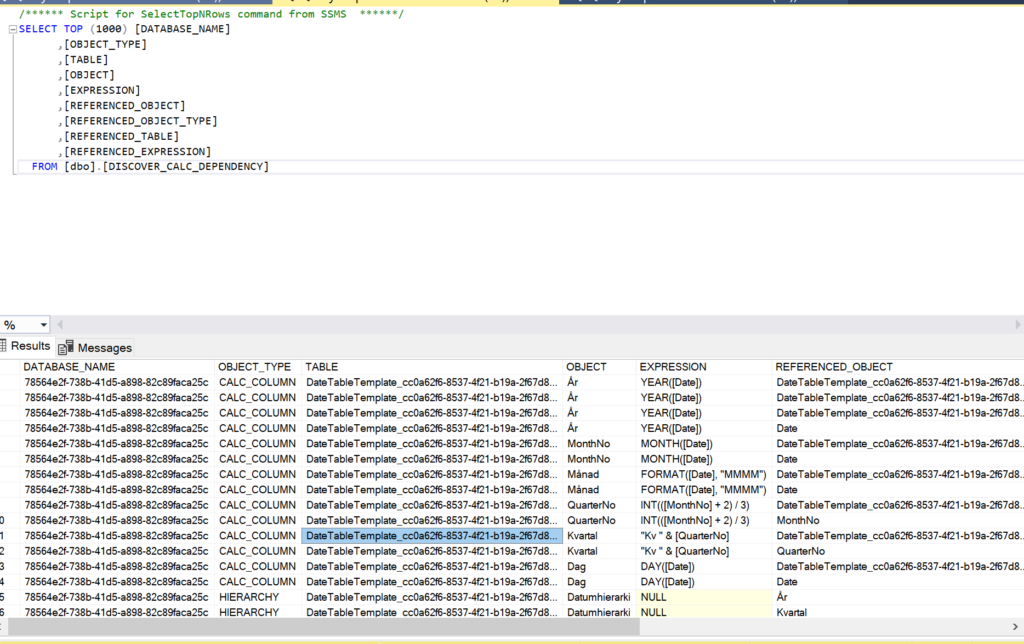

And here the result in a more structure way:

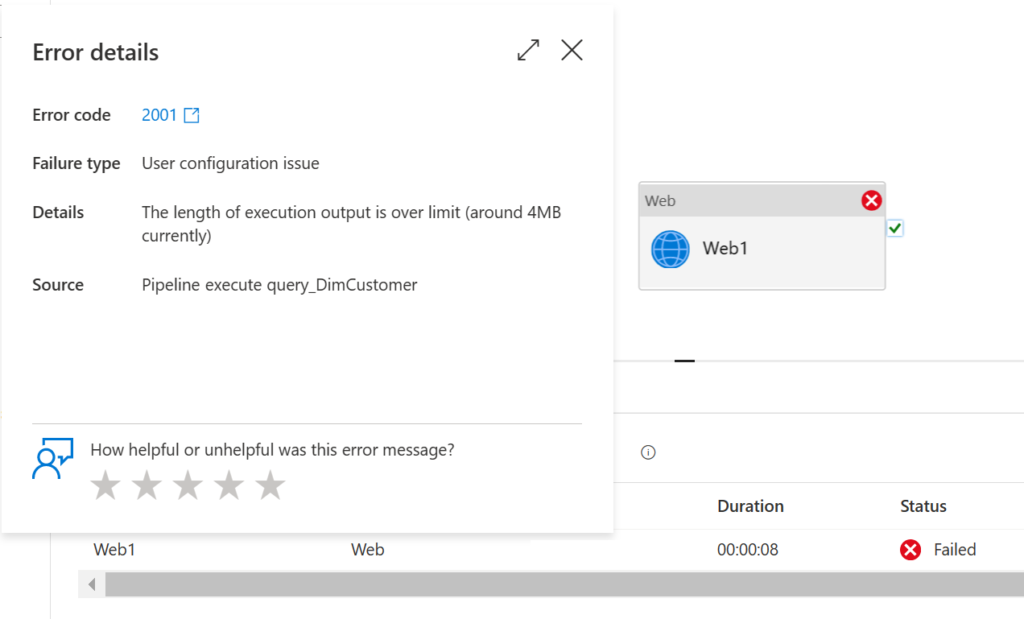

One interesting thing to point out is that Azure Data Factory runs into problems with returned data exceeding 4mb. This error pops up when querying larger tables, i.e. the DimCustomer table from the AdventureWorks sample. Interesting enough, it still is possible to copy the whole DimCustomer table into Azure SQL Database (see example below) or supposedly any other target data store. So this appears to just be a limitation for the Web Activity but not for the Copy Activity.

The URL follows this pattern:

https://api.powerbi.com/v1.0/myorg/groups/[workspaceID]/datasets/[datasetID]/executeQueries

Both the workspaceID and the datasetID can be copied from the address / URL bar by clicking onto the semantic model in Power BI Service. See here for more information.

The Method is Post

Paste the following into the Body

{"queries":[{"query":"EVALUATE VALUES(DimCustomer)"}]}

Pick System Assigned Managed Identity (Legacy: Managed Identity) as Authentication

Lastly, set Resource to

https://analysis.windows.net/powerbi/api

And here the error message resulting when running the pipeline:

3. Copy data from Power BI semantic model with Copy Activity

Now, we want to copy the data from a Power BI semantic model into an Azure SQL Database. The focus here is on the copying part and that you can achieve this with Azure Data Factory. For instance, in an on-prem sql server you actually have the possibility to use native query to move data from Power BI semantic models. Azure SQL Database does not have the native query capability, though.

Here, we use Azure SQL Database just as an example target. Of course, we could have chosen any other linked service as our destination data storage.

Note, I am a big fan of parameterising data factory objects like linked services, semantic models or pipelines in order to scale easily. In the example below, we skip this due to simplicity reasons.

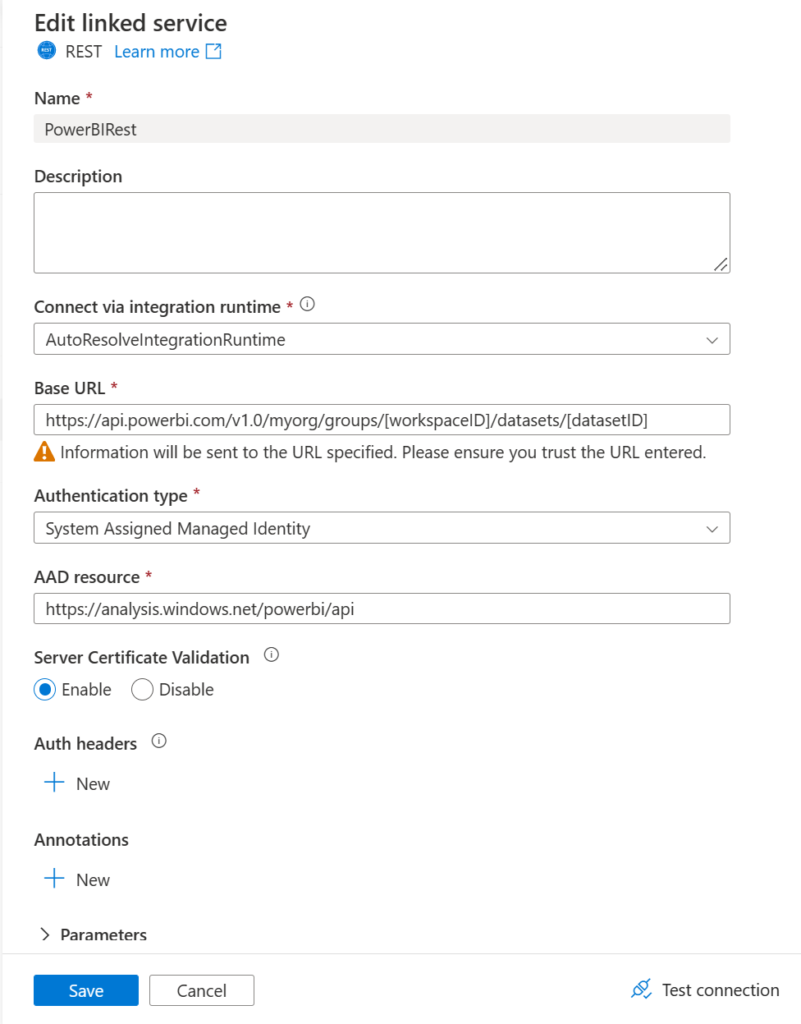

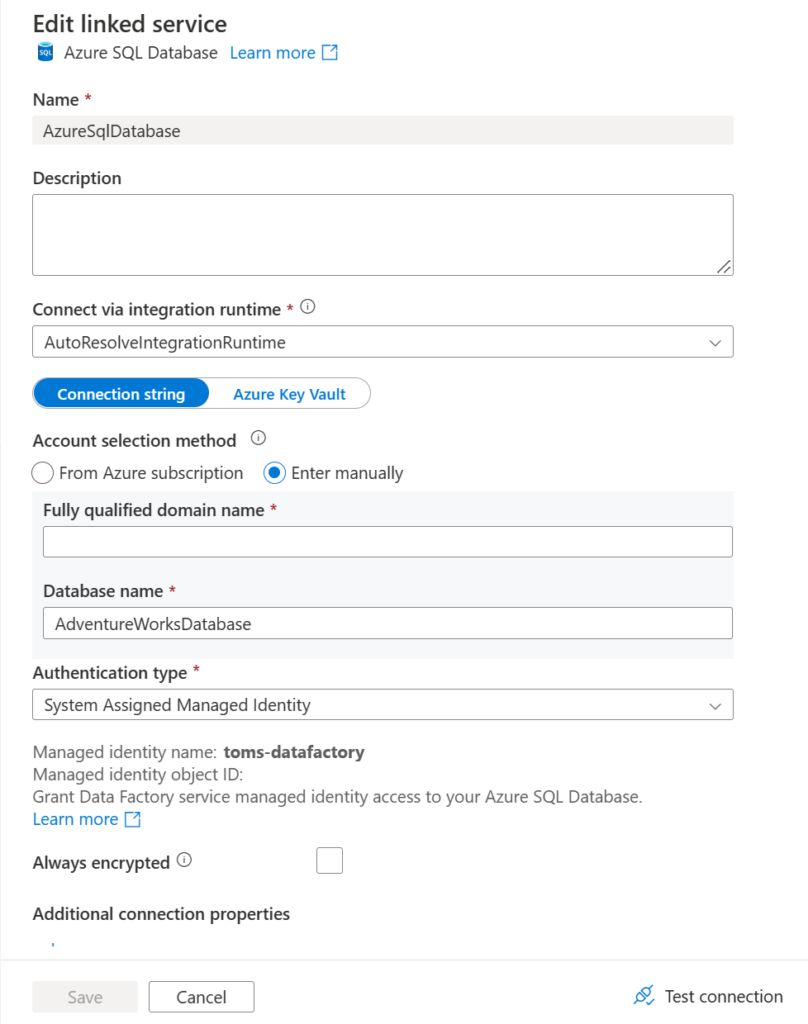

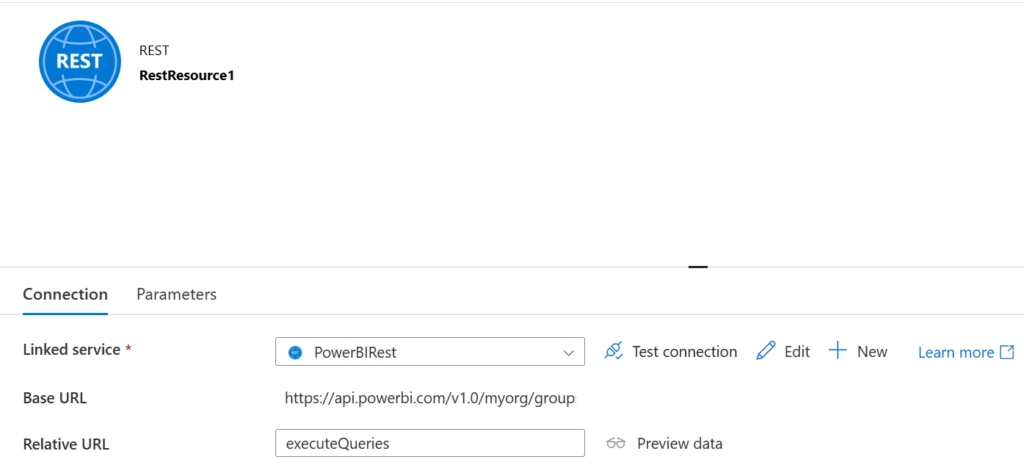

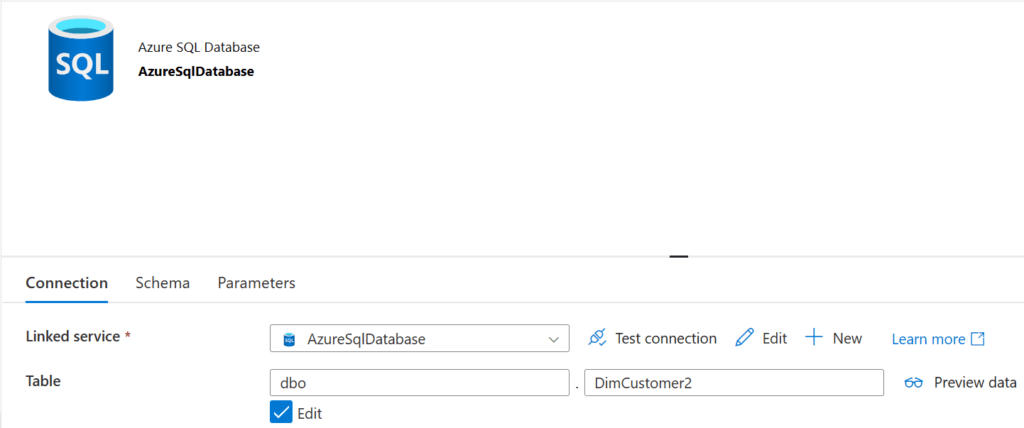

Let’s start by creating the linked services: One REST and one Azure SQL Database:

The semantic models look like this. Note, we named the table dbo.DimCustomer2 since there is already a dbo.DimCustomer table in our database.

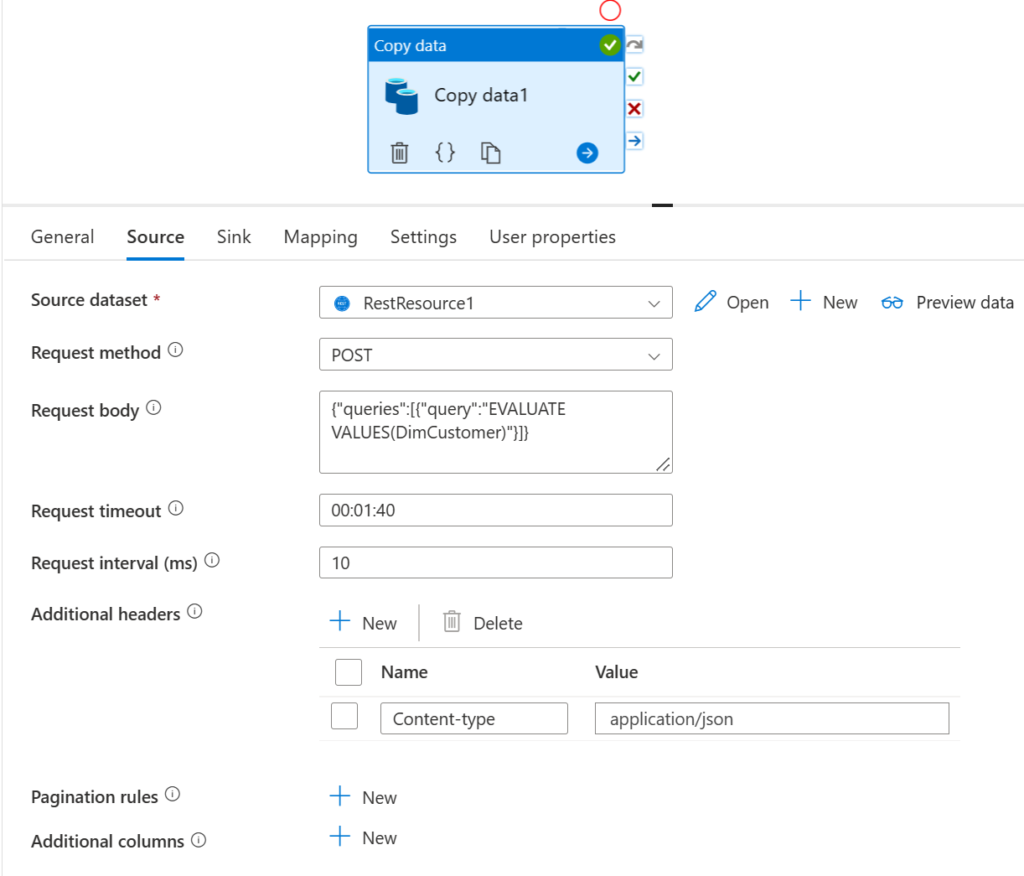

Next, we create the pipeline with a Copy Activity and the following Settings, starting with Source:

Pick RestResource1 as the Source dataset

The Request Method is Post

Paste the following into the Body

{"queries":[{"query":"EVALUATE VALUES(DimCustomer)"}]}

Lastly, add an addtional header with the name Content-type and the Value application/json

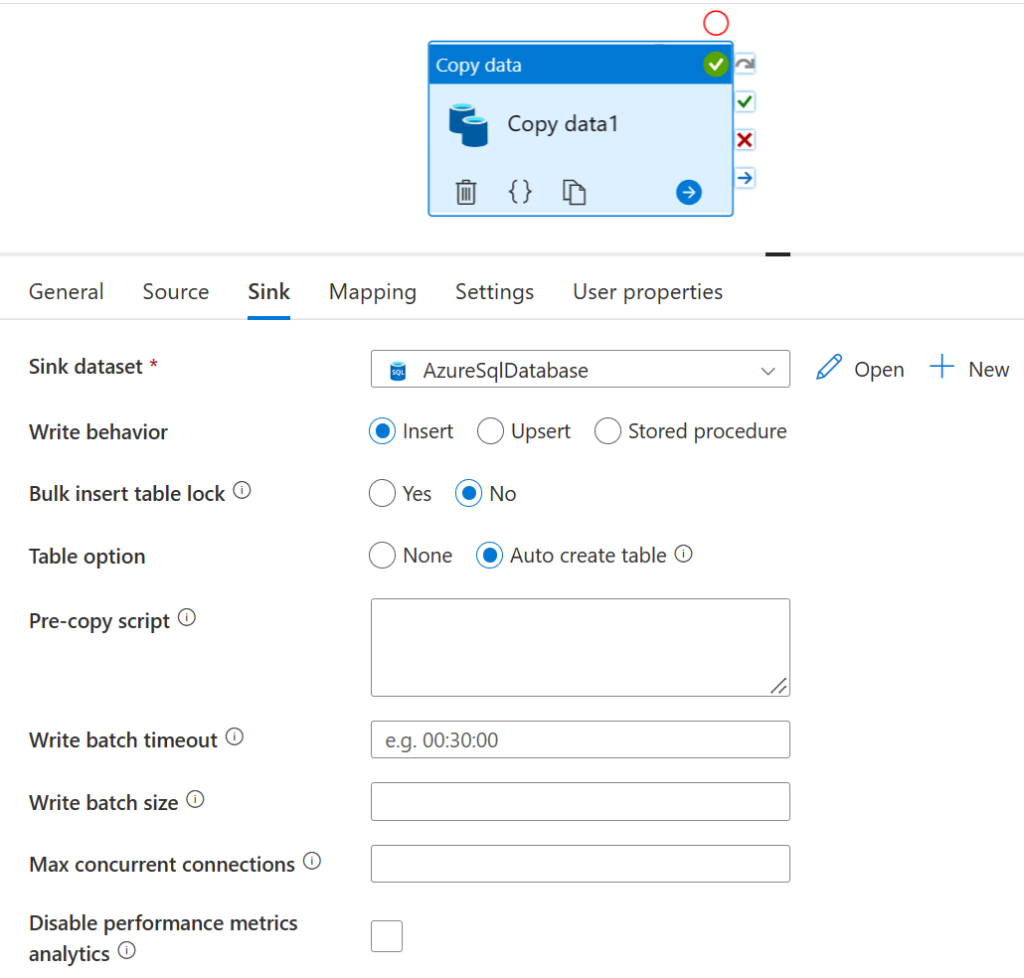

The Sink settings are as follows:

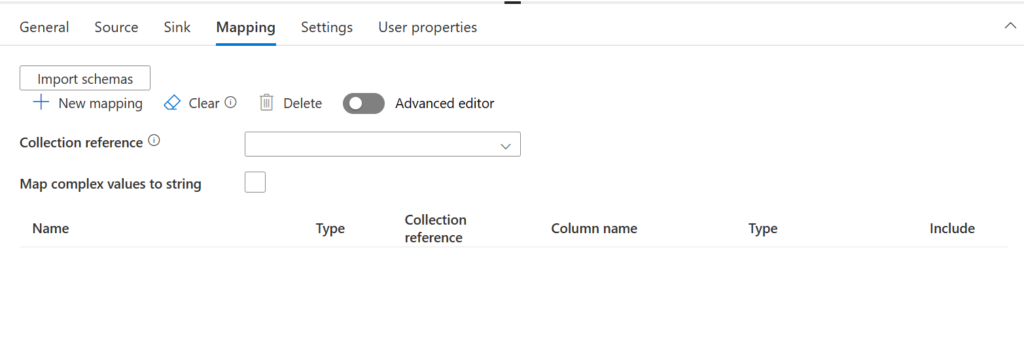

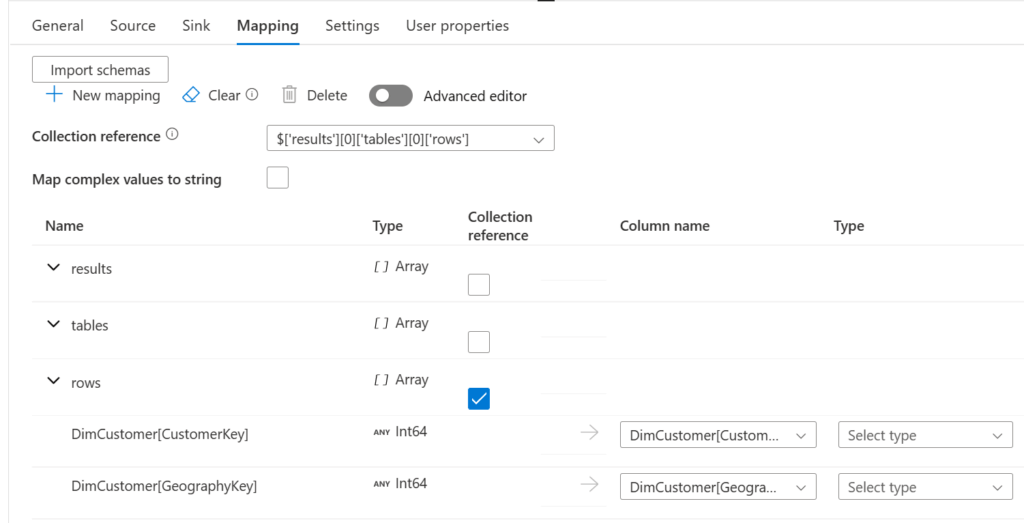

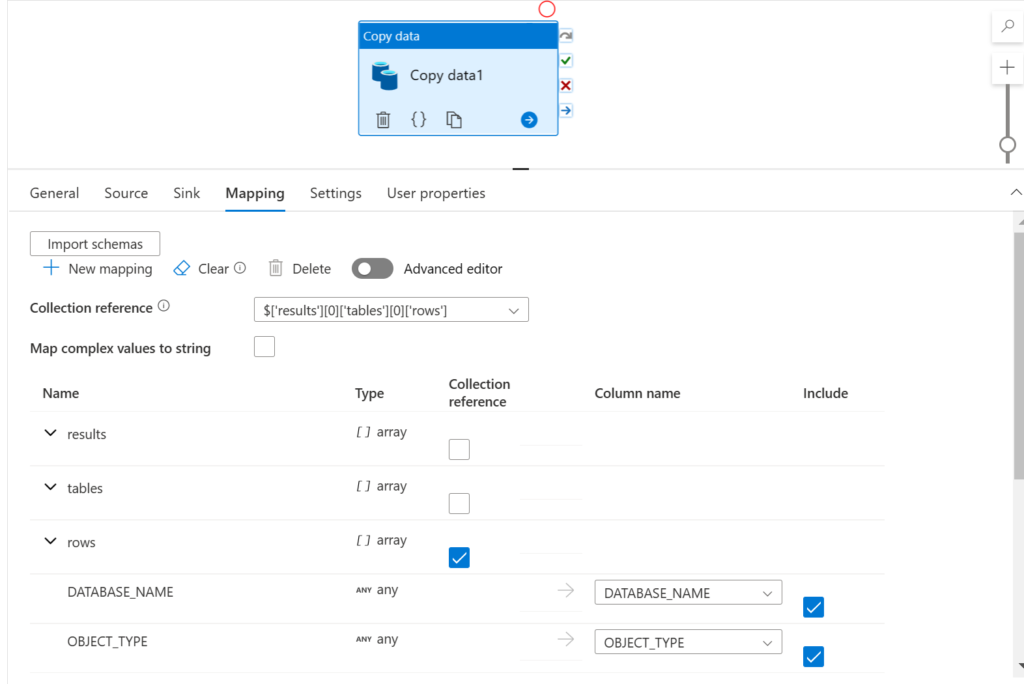

For the Mapping do the steps below:

At first, click on Import schemas:

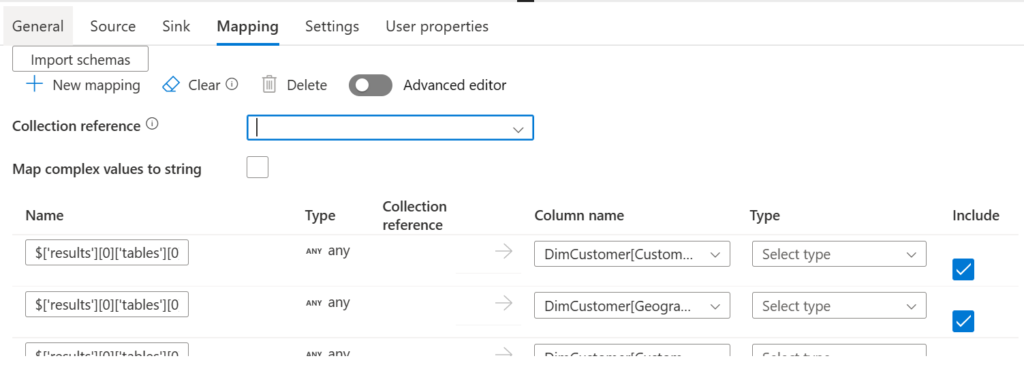

We end up with the screen below, which is not the schema we eventually want.

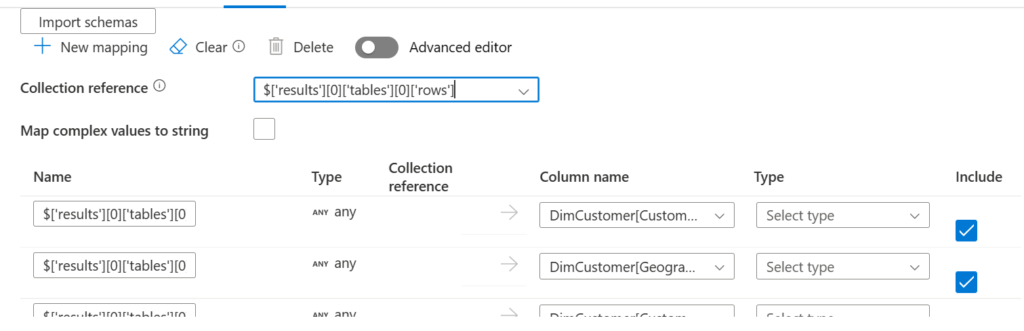

Paste the following code into Collection reference:

$['results'][0]['tables'][0]['rows']

Then press Import schemas, once more and you should end up with the correct schema:

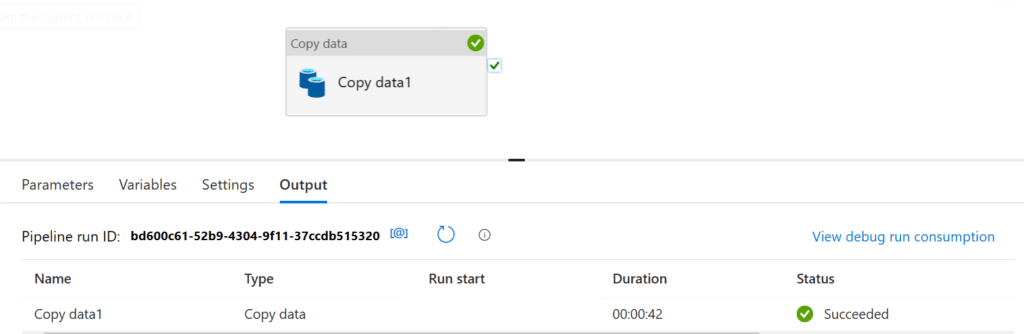

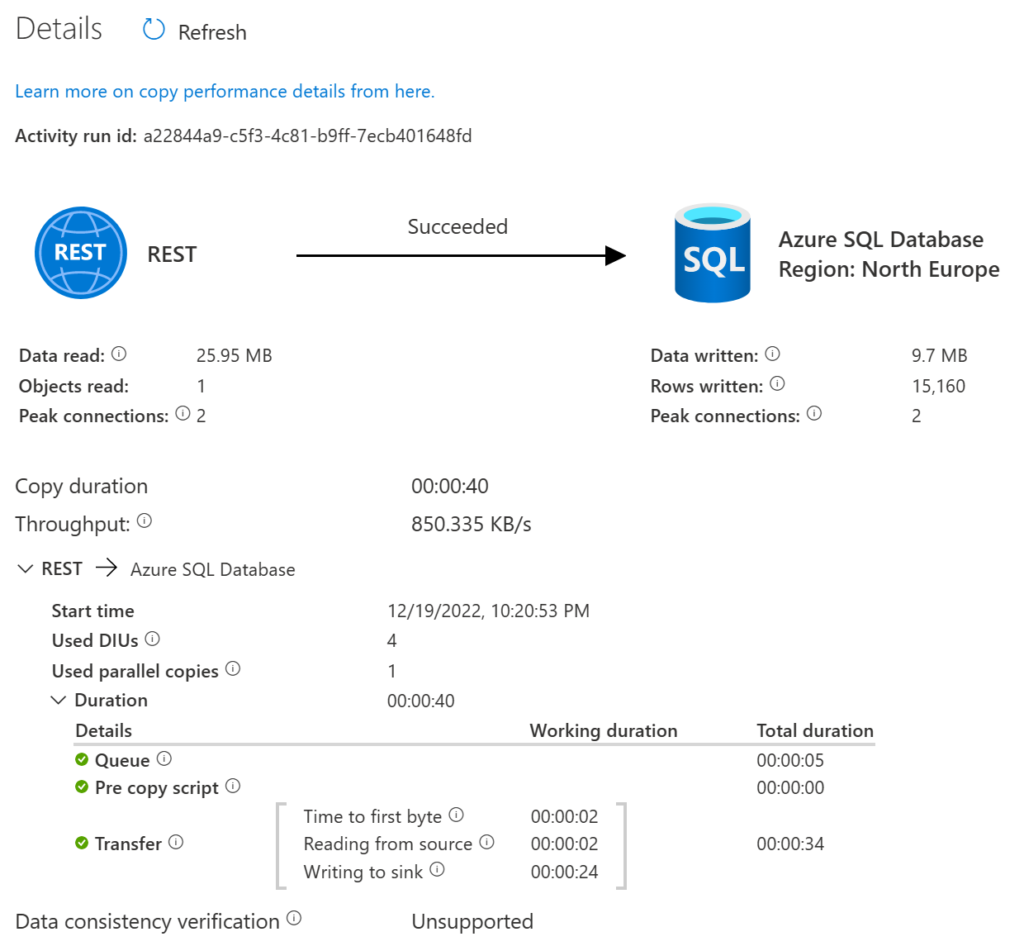

Now, we can trigger a pipeline run:

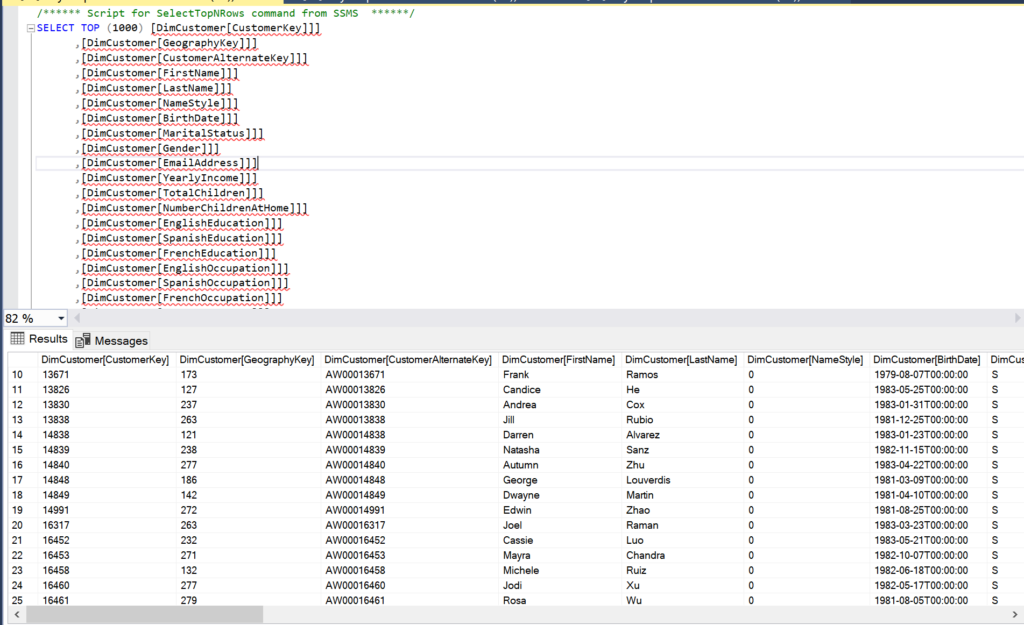

Let’s check the result in the Azure SQL Database, too. We can see that a new object called dbo.DimCustomer2 appeared:

And here the result set in the database.

Lastly, another example for the DISCOVER_CALC_DEPENDENCY meta view:

end.

And here we are: We have successfully fetched data from Power BI and even copied it into a database. Are you aware of any use cases other than the ones mentioned in the introduction? Perhaps, you have already used this or a similar approach to pull data from Power BI to store it somewhere else? Please, feel free to let us know by commenting below. :)

This is useful. We are able to run only “System.DISCOVER_CALC_DEPENDENCY”. Not able to run other DMVs like “$SYSTEM.DISCOVER_STORAGE_TABLE_COLUMN_SEGMENTS” or anything else. did you get a solution for this?

Hi Hariharan,

Thanks for your message!

You are right. I realised the same thing. I think it has to do with what it says here:

“For Power BI Premium datasets, DMVs for querying through the XMLA endpoint are limited to those that require database admin permissions. Some DMVs are not supported because they require Analysis Services server admin permissions.”

https://learn.microsoft.com/en-us/analysis-services/instances/use-dynamic-management-views-dmvs-to-monitor-analysis-services?view=asallproducts-allversions

Here are all the ones that I was able to query (no guarantee for completeness though). Note, not all of them had data:

DBSCHEMA_CATALOGS, DBSCHEMA_COLUMNS, DBSCHEMA_TABLES, DISCOVER_CALC_DEPENDENCY. DISCOVER_COMMAND_OBJECTS, DISCOVER_COMMANDS, DISCOVER_OBJECT_ACTIVITY, DISCOVER_PROPERTIES, DISCOVER_SESSIONS, MDSCHEMA_CUBES, MDSCHEMA_DIMENSIONS, MDSCHEMA_HIERARCHIES, MDSCHEMA_MEASURES, MDSCHEMA_MEMBERS, MDSCHEMA_PROPERTIES, MDSCHEMA_SETS, TMSCHEMA_ANNOTATIONS, TMSCHEMA_ATTRIBUTE_HIERARCHIES, TMSCHEMA_CALCULATION_ITEMS, TMSCHEMA_COLUMN_PERMISSIONS, TMSCHEMA_COLUMNS, TMSCHEMA_CULTURES, TMSCHEMA_DATA_SOURCES, TMSCHEMA_DETAIL_ROWS_DEFINITIONS, TMSCHEMA_EXPRESSIONS, TMSCHEMA_EXTENDED_PROPERTIES, TMSCHEMA_FORMAT_STRING_DEFINITIONS, TMSCHEMA_HIERARCHIES, TMSCHEMA_KPIS, TMSCHEMA_LEVELS, TMSCHEMA_LINGUISTIC_METADATA, TMSCHEMA_MEASURES, TMSCHEMA_MODEL, TMSCHEMA_OBJECT_TRANSLATIONS, TMSCHEMA_PARTITIONS, TMSCHEMA_PERSPECTIVES, TMSCHEMA_QUERY_GROUPS, TMSCHEMA_RELATIONSHIPS, TMSCHEMA_ROLE_MEMBERSHIPS, TMSCHEMA_ROLES, TMSCHEMA_TABLES, TMSCHEMA_VARIATIONS

Hope this helps :)

/Tom

Yes. Thanks. Need to wait till Microsoft Power BI lift this limitation.

I have been looking for a way to programmatically write this DMV’s to DWH with ADF. I was so happy when I found this, and so disappointed when I saw that almost every usable views requiere Analysis Services server admin to access.

I have all my datasets in one workspace (Shared datasets), so I started with a dataset in Power BI and created a function to invoke a list of all databases. This works as I wanted, but its not possible to refresh the dataset in service because of dynamic source is not supported.

How can we reach out to Microsoft, so it will be possible to access all DMV’s with XMLA? This is important step to have a good way to automatically document the models in Power BI.

Br

Marius :)

Hi Marius,

Thanks for your message! :)

I think you would want to post your idea here:

https://ideas.fabric.microsoft.com/

Feel free to paste back the idea link here, so we can give it an upvote as well!

/Tom

I am getting “DaxByteCountNotSupported” , this is due to table is having more rows and size of table is more than 15MB, have you implemented pagination logic for big table. appreciate if you share the sample pipeline.

Hi Veer,

Thanks for your message. I have not implemented a looping pagination logic yet, but I found this one by Curbal:

https://www.youtube.com/watch?v=fbeMw9jtJB4

Still, I find this might be worth a post in the future :)

/Tom