how to refresh individual tables or partitions in power bi semantic models from fabric data pipelines.

introduction.

With the new “Semantic model refresh” pipeline activity (still in preview), triggering refreshes from Fabric Data Pipelines has become a lot easier. However, this activity does not provide an option to refresh only certain objects of the semantic model, such as partitions or tables. Looking into the JSON source code of the activity, we can see a property called operationType (see picture below). I can imagine that, at some point, other operations like refreshing single tables or partitions, and maybe even more, will become possible, who knows… Until then, if we want to refresh only single tables from Fabric Data Pipelines, we can use a setup similar to the one we used with Azure Data Factory for the same purpose. The step-by-step guide below demonstrates this method. Another way of achieving the same result is to use semantic-link in a notebook that is run by a Fabric Data Pipeline, as Sandeep has shown.

A frequent question has also been whether the new activity works in Azure Data Factory. Well, according to this comment I found on LinkedIn, we still need to use a Web activity there, at least for now. But perhaps this will change in the future, too. I also recommend checking out data-marc’s thoughts on the new activity. As always, he has some interesting takes on it.

prerequisites.

1. A Fabric capacity and workspace

2. A Fabric data pipeline

3. A Power BI semantic model

1. What’s the goal?

The goal is to refresh only certain partitions or tables of a semantic model. Below is an example where we refreshed only the table random_dog_table:

2. Set up a new connection

First, we need to set up a new Web V2 connection, which we will use to call the API later in the pipeline. To do so, click on the settings icon in the top right and then on Manage connections and gateways.

Next, click on + New.

Now, select the Cloud option, provide a name and fill in the other fields according to the picture. When you are done, press the Create button.

Connection type: Web V2

Base Url: https://api.powerbi.com/v1.0/myorg

Token Audience Uri: https://analysis.windows.net/powerbi/api

For the Authentication method, I selected OAuth 2.0. To be able to create the connection, we need to provide our credentials by clicking on Edit credentials. Here, you log in as a user, and the data pipeline then authenticates to Fabric on your behalf. In a real-life scenario, I strongly recommend using a service principal instead of OAuth 2.0. In the appendix of this other post, I listed the steps necessary for using a service principal for authentication.

Note: according to the documentation, you might also be able to use https://api.powerbi.com as the Token Audience Uri. I tried the following settings, but was only able to create the connection when choosing Anonymous as the Authentication method, which then threw an error later on in the pipeline:

Connection type: Web V2

Base Url: https://api.powerbi.com/v1.0/myorg/

Token Audience Uri: https://api.powerbi.com
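If you follow the service principal route recommended above, it helps to see where the Token Audience Uri ends up. The following Python sketch builds a client-credentials token request against Microsoft Entra ID but does not send it. The audience https://analysis.windows.net/powerbi/api becomes the OAuth 2.0 scope once /.default is appended. The tenant ID, client ID and secret are hypothetical placeholders. The sketch runs outside of Fabric purely for illustration, because inside the pipeline the Web V2 connection acquires the token for you.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical placeholders: replace with your own tenant and app registration.
TENANT_ID = "00000000-0000-0000-0000-000000000000"
CLIENT_ID = "11111111-1111-1111-1111-111111111111"
CLIENT_SECRET = "<client-secret>"  # keep real secrets in a vault, not in code

# The Token Audience Uri from the Web V2 connection.
AUDIENCE = "https://analysis.windows.net/powerbi/api"


def build_token_request(tenant_id: str, client_id: str, client_secret: str) -> Request:
    """Build (but do not send) a client-credentials token request to Microsoft Entra ID."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        # The audience plus "/.default" requests the app's granted Power BI permissions.
        "scope": f"{AUDIENCE}/.default",
    }).encode("utf-8")
    return Request(
        url,
        data=form,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


req = build_token_request(TENANT_ID, CLIENT_ID, CLIENT_SECRET)
print(req.get_method(), req.full_url)
```

Sending this request, for example with urllib.request.urlopen, would return a JSON document whose access_token could then be used as a Bearer token against the Power BI REST API.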

3. Add Web Activity to your Fabric Data pipeline

We drag a Web activity into our data pipeline and select the previously created Web V2 connection from the dropdown. Then, we fill in the rest as shown in the picture below.

Relative URL: groups/[WorkspaceID]/datasets/[SemanticModelID]/refreshes (you can find the WorkspaceID and SemanticModelID in your browser's URL bar after opening the semantic model in Fabric, similar to here)

Method: POST

Body:

Headers: content-type as Name and application/json as Value
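To make the web activity settings a bit more tangible, here is a Python sketch that assembles the same POST call outside of Fabric. The request body follows the shape documented for the Power BI Enhanced Refresh API, with type, commitMode and an objects array. It is an illustration, not necessarily the exact body used in this pipeline. The workspace ID, semantic model ID and access token are hypothetical placeholders. In the pipeline itself, the Web V2 connection adds the Authorization header for you.

```python
from __future__ import annotations

import json
from urllib.request import Request

# Base Url of the Web V2 connection created in step 2.
BASE_URL = "https://api.powerbi.com/v1.0/myorg"


def refresh_object(table: str, partition: str | None = None) -> dict:
    """One entry of the "objects" array: a whole table, or a single partition of it."""
    obj = {"table": table}
    if partition is not None:
        obj["partition"] = partition
    return obj


def build_refresh_request(workspace_id: str, semantic_model_id: str,
                          objects: list[dict], access_token: str) -> Request:
    """Build (but do not send) an Enhanced Refresh API call limited to `objects`."""
    # Same path as the Relative URL of the web activity.
    relative_url = f"groups/{workspace_id}/datasets/{semantic_model_id}/refreshes"
    body = {
        "type": "Full",                 # refresh type applied to the listed objects
        "commitMode": "transactional",  # commit all listed objects together, or none
        "objects": objects,             # only these tables/partitions are refreshed
    }
    return Request(
        f"{BASE_URL}/{relative_url}",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",         # same header as in the activity
            "Authorization": f"Bearer {access_token}",  # handled by the connection in Fabric
        },
        method="POST",
    )


# Hypothetical IDs and token, for illustration only.
req = build_refresh_request(
    "<WorkspaceID>", "<SemanticModelID>",
    [refresh_object("random_dog_table")],
    "<access-token>",
)
print(req.get_method(), req.full_url)
print(req.data.decode("utf-8"))
```

The printed JSON is the kind of payload that goes into the Body field of the web activity. To target a single partition instead of a whole table, you could pass something like refresh_object("random_dog_table", "<partition name>"), where the partition name is a hypothetical placeholder for one that exists in your model.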

4. Showtime

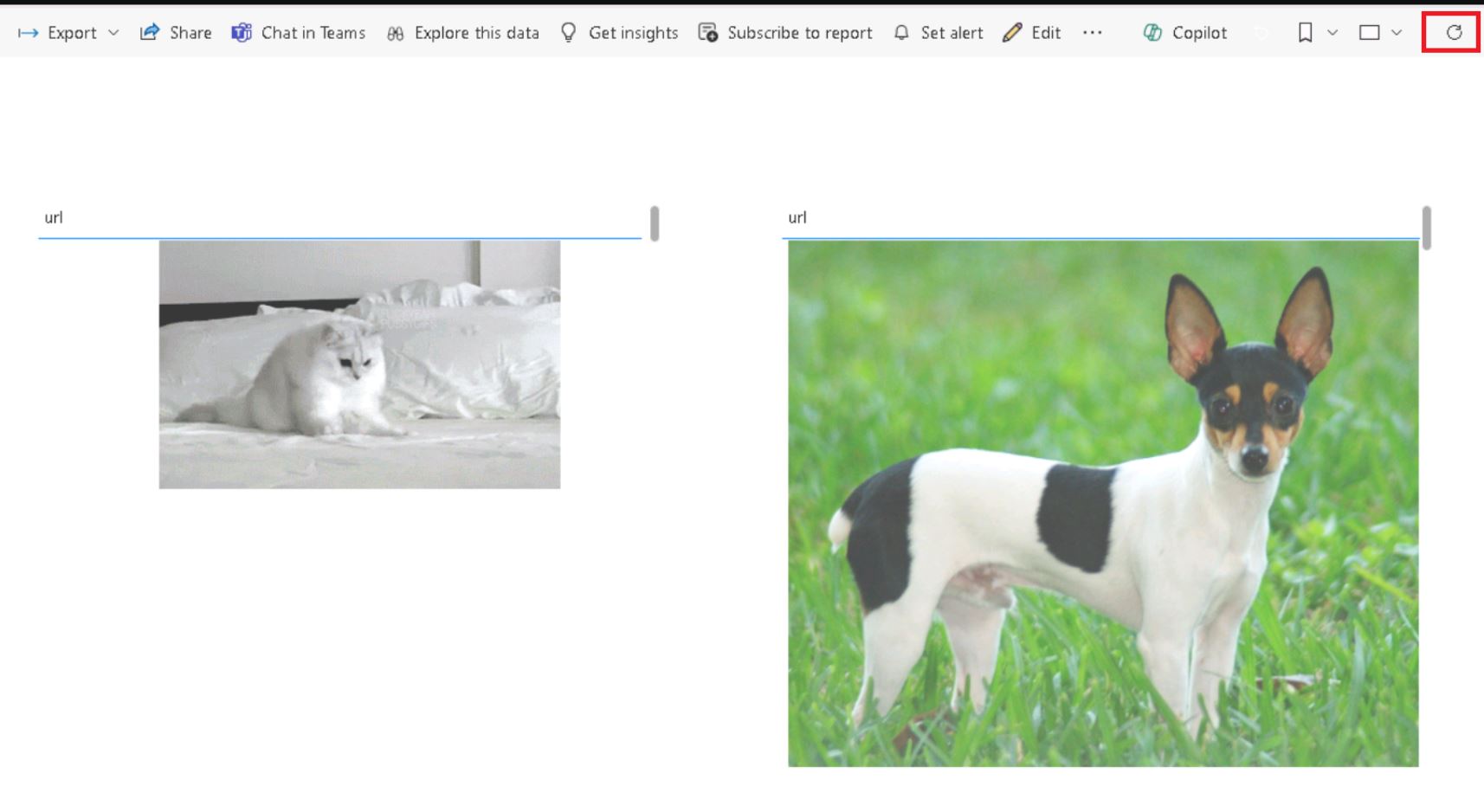

Let’s take a look at our report, which contains two tables showing pictures of either a cat or a dog. These pictures come from two different tables called random_cat_table and random_dog_table. These tables, in turn, use different APIs, and every API call returns another random picture. Check out this article if you need such easy-to-use data sources.

Now, let’s run the pipeline, which should refresh only random_dog_table (and not random_cat_table):

Let’s head back to our report, where indeed only the dog picture has changed. Be aware that you might need to refresh the visuals by clicking on the Refresh button in the top right corner. Note that the cat on the left might look a bit different as well. It is still the same cat picture, I promise. It’s just a GIF from GIPHY, and the screenshot below captures the cat at a different moment of its movement. Either way, it’s a cute one, isn’t it? Oh, and the dogs as well! :-)

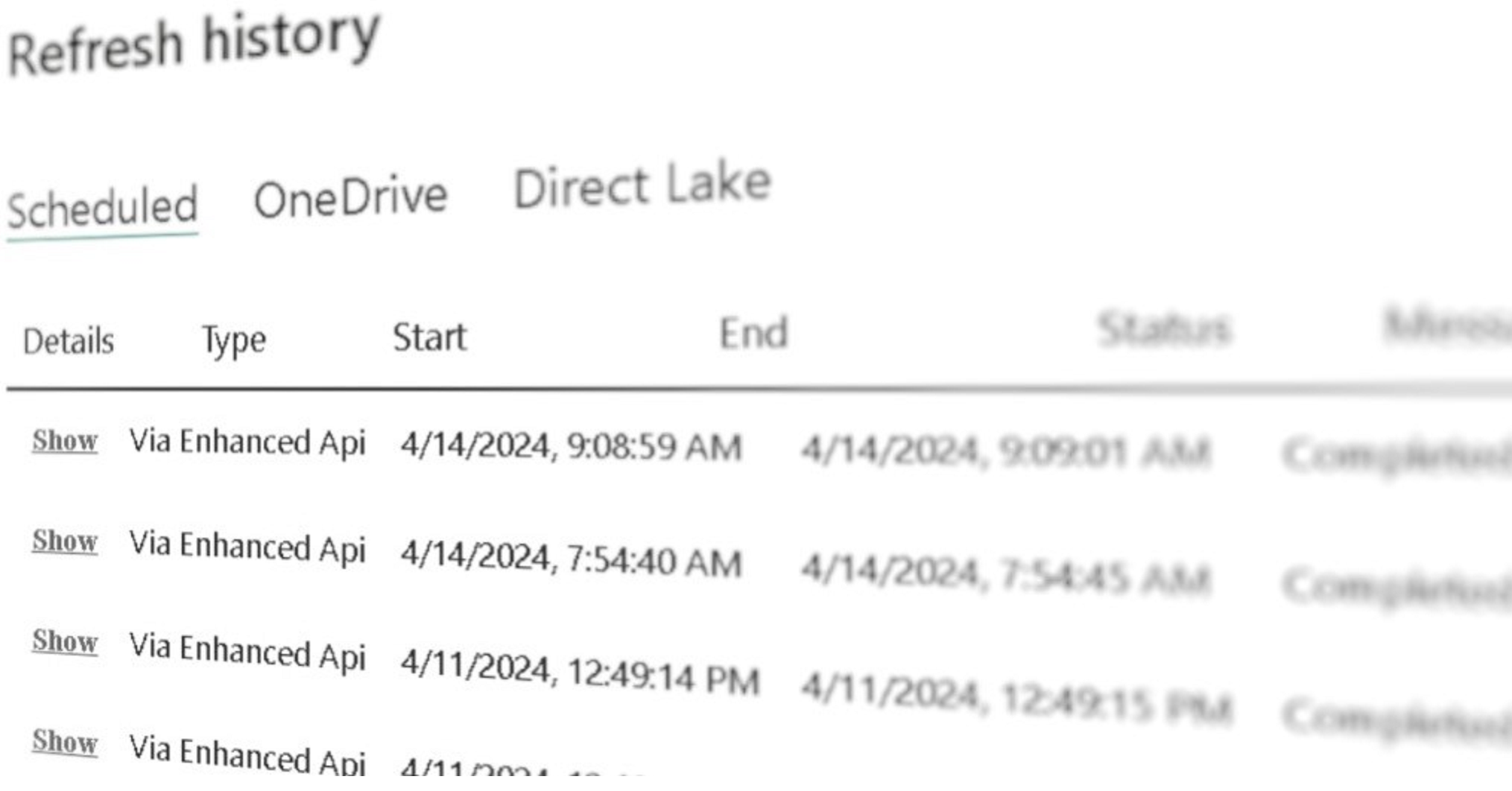

You can also check out the refresh history of the semantic model. There, it should display “Via Enhanced API” as the refresh type:
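You might prefer to check this programmatically, for example from a second web activity or a small script. The refresh history is available via a GET request on the same refreshes path, optionally with ?$top=1. The Python sketch below assumes a response shaped like {"value": [...]}, where each entry has refreshType, startTime (an ISO 8601 timestamp) and status fields. It checks whether the latest entry reports ViaEnhancedApi, which is how the REST API represents the “Via Enhanced API” refresh type. The sample JSON is made up for illustration and is not output from a real model.

```python
import json
from datetime import datetime


def _parse_time(value: str) -> datetime:
    # Normalise a trailing "Z" so datetime.fromisoformat accepts it on older Pythons.
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


def latest_refresh(history_json: str) -> dict:
    """Return the most recent entry from a refresh history response ({"value": [...]})."""
    entries = json.loads(history_json).get("value", [])
    if not entries:
        return {}
    return max(entries, key=lambda entry: _parse_time(entry["startTime"]))


def is_enhanced_refresh(entry: dict) -> bool:
    """True if the entry was triggered through the Enhanced Refresh API."""
    return entry.get("refreshType") == "ViaEnhancedApi"


# Made-up sample response, shaped like the refresh history endpoint's output.
sample = """{"value": [
  {"refreshType": "Scheduled", "startTime": "2024-01-01T08:00:00Z", "status": "Completed"},
  {"refreshType": "ViaEnhancedApi", "startTime": "2024-01-02T08:00:00Z", "status": "Completed"}
]}"""

latest = latest_refresh(sample)
print(latest["refreshType"], latest["status"], is_enhanced_refresh(latest))
```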

By the way, with this setup I was also able to refresh (or rather, reframe) single tables in a Direct Lake semantic model. Note that you might need to reframe the model in full mode at least once, e.g. with a manual “refresh” from the UI, before the Enhanced API works. More about reframing Direct Lake semantic models from Fabric data pipelines can be found in this article. Reframing default semantic models, however, did not work for me, neither with the Enhanced API nor with the new Semantic model refresh pipeline activity (error: PowerBIOperationNotSupported).

end.

This is it! Not too hard, I guess. As mentioned above, the API only worked for me with the Audience Uri https://analysis.windows.net/powerbi/api, which we specified in the connection. Check out this blog article if you need Resource / Audience Uris for other Azure data sources / destinations. Finally, if you need to do the same exercise from Azure Data Factory, make sure to check out this post.