how to reframe (“refresh”) your direct lake semantic model from fabric data pipelines.

introduction.

“Wait, what? Why would you want to refresh a Power BI semantic model in Direct Lake mode? I thought there was no need for such things with Direct Lake?”

Well, yes and no. A refresh in the classic sense of loading data, as in import mode, is not needed when using Direct Lake. With Direct Lake, changes in your underlying OneLake (in fact, you need a Lakehouse or Warehouse provisioned on top of it) are reflected seamlessly in Power BI.

But there are actually cases where you want to disable this automatic detection of changes in Direct Lake and their reflection in Power BI. There is even an explicit setting for this, as explained in Microsoft’s Direct Lake documentation. There, they also describe a scenario in which you would want to toggle that setting off:

“You may want to disable if, for example, you need to allow completion of data preparation jobs before exposing any new data to consumers of the model. When disabled, you can invoke refresh manually or by using the refresh APIs. Invoking a refresh for a Direct Lake model is a low cost operation where the model analyzes the metadata of the latest version of the Delta Lake table and is updated to reference the latest files in the OneLake.”

So, one use case for disabling this feature is when you do not want to expose changes to your tables to consumers instantly, but only after all your tables have been populated successfully. Also, a refresh in Direct Lake does not mean loading (and thereby duplicating) the data into Power BI as in import mode. Instead, a refresh just tells Power BI to point itself to the latest version of the Delta tables. Because this is not a “refresh” in the classic sense, we refer to it as reframing instead. To invoke such a reframe, you can use either the refreshes API or the new Semantic Model Refresh pipeline activity. In this article, we will use the refreshes API and fire it from a Fabric Data Pipeline.

On the one hand, this approach gives us more control over when, and in which state, data is exposed. On the other hand, we lose the near-real-time aspect of Direct Lake. Either way, we still avoid potentially long loading times, since a Direct Lake refresh does not duplicate data and is a quick, low-cost operation.

Currently, a Direct Lake refresh also clears the whole cache of your semantic model. You might therefore want to consider pre-warming your semantic model as part of your refresh pipeline. As always when I write Fabric blog posts, it’s almost impossible to do so without linking to at least one of Chris Webb’s articles: here, he explains in more detail what a refresh means in the context of Direct Lake.
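One possible way to pre-warm is to send DAX queries to the model through the Power BI REST API's executeQueries endpoint. Columns that a query touches should then be loaded into memory. This is an assumption on my part and not necessarily the method used in the pre-warming post. The Python sketch below only builds the request. The workspace ID, model ID, token and DAX query are placeholders, and `build_prewarm_request` is a hypothetical helper. As far as I know, the endpoint accepts one query per call, so the sketch builds one request per query.

```python
import json
import urllib.request

# Assumed base URL of the Power BI REST API.
API_BASE = "https://api.powerbi.com/v1.0/myorg"


def build_prewarm_request(workspace_id: str, model_id: str, token: str,
                          dax_query: str) -> urllib.request.Request:
    """Build (but do not send) an executeQueries call for a single DAX query.

    Running the query should make the model load the touched columns
    into memory. Hypothetical helper, placeholders for all IDs.
    """
    url = f"{API_BASE}/groups/{workspace_id}/datasets/{model_id}/executeQueries"
    body = {
        "queries": [{"query": dax_query}],
        "serializerSettings": {"includeNulls": True},
    }
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )
```

Sending it would be a matter of `urllib.request.urlopen(req)` with a valid token, once per query you want to warm up.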

prerequisites.

1. A Fabric capacity and workspace

2. A Data pipeline

3. A semantic model in Fabric

4. A data connection to the Power BI REST API endpoint. Check out this blog post on how to do this.

plan of action.

1. What’s the goal?

As already explained in the introduction, we’d like to refresh (actually reframe) a semantic model in Direct Lake mode:

2. Build the pipeline

As mentioned in the introduction, there is also a new Semantic Model Refresh activity in Fabric Data Pipelines that refreshes (in Direct Lake mode: reframes) the whole semantic model. You can find out more in the documentation.

If you’d rather use the Web activity, you first need to have a Power BI REST API connection in place. A walkthrough on how to set this up can be found here. Note that I found the refreshes API works with both authentication methods: service principal and OAuth 2.0. There are a few APIs on top of Direct Lake semantic models that (currently?) do not work with service principals; I elaborated a bit on this issue in the article on how to use the new Fabric REST APIs, too. Also, at the time of writing this blog post, there are some limitations when using the APIs on default semantic models, which can result in a PowerBIOperationNotSupported error.
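In the pipeline, the Web v2 connection handles authentication for you. If you want to test the same call outside the pipeline, for example from a script, a service principal would obtain its access token via the OAuth 2.0 client credentials flow. The Python sketch below only builds that token request against the Microsoft identity platform v2.0 endpoint. The tenant ID, client ID and secret are placeholders, and `build_token_request` is a hypothetical helper, not part of any SDK.

```python
import urllib.parse
import urllib.request

# Scope for the Power BI REST API in the client credentials flow.
POWER_BI_SCOPE = "https://analysis.windows.net/powerbi/api/.default"


def build_token_request(tenant_id: str, client_id: str,
                        client_secret: str) -> urllib.request.Request:
    """Build (but do not send) a client-credentials token request
    for a service principal. Hypothetical helper."""
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    form = urllib.parse.urlencode({
        "grant_type": "client_credentials",
        "client_id": client_id,
        "client_secret": client_secret,
        "scope": POWER_BI_SCOPE,
    }).encode("utf-8")
    return urllib.request.Request(
        url,
        data=form,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )
```

The JSON response of that request contains an `access_token` field, which then goes into the `Authorization: Bearer ...` header of the refreshes call.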

We start by grabbing a Web activity in our data pipeline and selecting the previously created Web v2 connection from the dropdown. Then, we fill in the rest as shown in the picture below.

Relative URL: groups/[WorkspaceID]/datasets/[SemanticModelID]/refreshes (you can find the WorkspaceID and SemanticModelID in your browser’s URL bar after clicking on the semantic model in Fabric, similar to here)

Method: POST

Body: {}

Headers: content-type as Name and application/json as Value
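To make the settings above concrete, here is a minimal Python sketch of the request the Web activity sends. It assumes the connection's base URL is `https://api.powerbi.com/v1.0/myorg`. It also includes a hypothetical helper that pulls both IDs out of a browser URL. That helper assumes the URL contains `groups/<workspace-guid>/datasets/<model-guid>`, so please check this against your own URL bar. Both function names are illustrative, and the token is a placeholder.

```python
import json
import re
import urllib.request

# Assumed base URL of the Web v2 connection.
API_BASE = "https://api.powerbi.com/v1.0/myorg"

# Assumed URL shape: .../groups/<workspace-guid>/datasets/<model-guid>/...
_ID_PATTERN = re.compile(r"groups/([0-9a-fA-F-]{36})/datasets/([0-9a-fA-F-]{36})")


def ids_from_browser_url(url: str) -> tuple[str, str]:
    """Extract (workspace_id, semantic_model_id) from a browser URL."""
    match = _ID_PATTERN.search(url)
    if match is None:
        raise ValueError(f"No workspace/semantic model IDs found in: {url}")
    return match.group(1), match.group(2)


def build_reframe_request(workspace_id: str, model_id: str,
                          token: str) -> urllib.request.Request:
    """Build (but do not send) the Web activity's call:
    POST groups/{workspace}/datasets/{model}/refreshes with body {}."""
    url = f"{API_BASE}/groups/{workspace_id}/datasets/{model_id}/refreshes"
    return urllib.request.Request(
        url,
        data=json.dumps({}).encode("utf-8"),  # empty JSON body, as in the pipeline
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )
```

Sending it with `urllib.request.urlopen(req)` should return `202 Accepted`. The refresh runs asynchronously, so the call returns before the reframe has finished.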

Note that for the body we simply pasted in “{}”. With Azure Data Factory and Power BI import mode, we were able to refresh individual tables and even partitions via the Enhanced API, simply by specifying them in the body. I tried to do exactly that here, but although the API call returned a successful response, the reframing appeared not to have worked. This might become possible in the future, though. Check out the appendix to see how things looked for me at the time of writing this article. [Edit – 2024-04-15: I tried this once more when I wrote the article about how to refresh individual tables or partitions in Power BI semantic models from Fabric Data Pipelines, and it worked, even with a model in Direct Lake mode! It could be that I used the wrong body in the call shown in the appendix, or that a model needs to be reframed with a full refresh at least once.]
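For comparison, the following sketch builds a body for an enhanced refresh scoped to individual tables. It is similar to the one shown in the appendix but sets `type` and `commitMode` explicitly. The field names follow the enhanced refresh documentation as I understand it. Whether a table-scoped body reframes a Direct Lake model as expected is exactly what the edit note above is about, so treat this as untested. `build_table_refresh_body` is a hypothetical helper.

```python
import json


def build_table_refresh_body(tables: list[str], refresh_type: str = "Full") -> str:
    """Build a JSON body for an enhanced refresh scoped to individual tables.

    Each entry in "objects" names one table; adding a "partition" key to an
    entry would target a single partition instead. Hypothetical helper.
    """
    body = {
        "type": refresh_type,
        "commitMode": "transactional",
        "objects": [{"table": name} for name in tables],
    }
    return json.dumps(body)
```

The resulting string would go into the Body field of the Web activity in place of `{}`.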

3. Showtime

Before we execute the pipeline, let’s first toggle off the setting that keeps the Direct Lake data up to date, so we can validate whether our pipeline works as intended. Do not forget to click the Apply button after changing the configuration.

Now, let’s head over to our Power BI report referencing the semantic model. Currently, there are 23280 different cities in our dimension_city table:

Next, we add a new row to that table in our Lakehouse:

Going back to our report, we can see that we still have the same number of rows, even after pressing the refresh button at the top:

Let’s run the pipeline:

Now, we can see that the new city appears in Power BI as well:
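The POST call only triggers the reframe and returns right away. You might therefore want to confirm that the reframe actually finished, for example from a notebook, or by wrapping a second Web activity (GET) in an Until activity. The sketch below shows that check. It assumes the refresh history endpoint `GET groups/[WorkspaceID]/datasets/[SemanticModelID]/refreshes?$top=1`, where a `status` of `Unknown` means the refresh is still in progress. The helper names are hypothetical, and only URL building and response parsing are shown.

```python
import json

# "Unknown" means still running (or unknown); these values are final.
FINAL_STATUSES = {"Completed", "Failed", "Disabled"}


def history_url(workspace_id: str, model_id: str) -> str:
    """URL returning only the most recent entry of the refresh history."""
    return ("https://api.powerbi.com/v1.0/myorg/groups/"
            f"{workspace_id}/datasets/{model_id}/refreshes?$top=1")


def latest_refresh(history_json: str) -> tuple[str, str]:
    """Return (status, refreshType) of the newest refresh in a history response."""
    entries = json.loads(history_json).get("value", [])
    if not entries:
        raise ValueError("Refresh history is empty")
    newest = entries[0]
    return newest.get("status", "Unknown"), newest.get("refreshType", "")


def is_finished(status: str) -> bool:
    """True once the refresh has reached a final state."""
    return status in FINAL_STATUSES
```

In a polling loop, you would call the history URL with the same bearer token, pass the response body to `latest_refresh`, and stop once `is_finished` returns `True`.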

end.

Here we are! We now have more control over which changes are exposed to our end users in Power BI, and when. Note that, currently, the refreshes API also clears the entire cache of the model. In fact, I made use of this when testing for the blog post about pre-warming the memory of the model. As Chris Webb states, this should change in the mid term, so that reframing the model no longer clears the cache entirely. Instead, Microsoft might provide a separate API call for clearing it. Also, in this approach, we used the Power BI refreshes API. I could imagine that there will be a dedicated Fabric API call for this in the future, too, but that is just speculation.

appendix.

[Edit – 2024-04-15: I tried this once more when I wrote the article about how to refresh individual tables or partitions in Power BI semantic models from Fabric Data Pipelines, and it worked, even with a model in Direct Lake mode! It could be that I used the wrong body in the call shown below, or that a model needs to be reframed with a full refresh at least once.]

Here is how I tried to reframe just a single table, using the Enhanced API with {"objects":[{"table":"dimension_city"}]} in the body:

This is what the Output response looked like. It does not appear that anything went wrong:

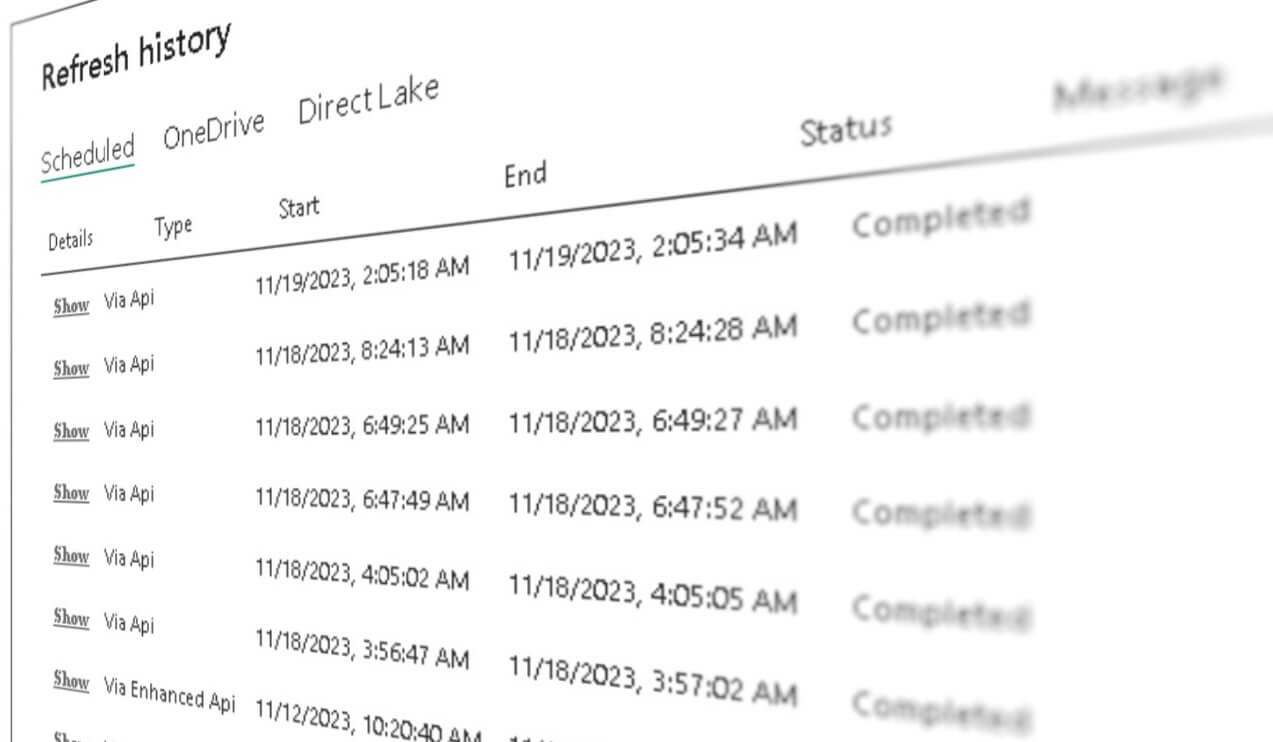

The refresh history also looks good. It even correctly states that an Enhanced API refresh has Completed:

But when checking the Power BI report, the new row did not show up. The same was true for the Last Refreshed Date of that table in SSMS, which still showed the last time we had refreshed the whole model:

The same behaviour was observable when processing a single table directly via the XMLA endpoint from SSMS.
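For reference, processing a single table over the XMLA endpoint boils down to a TMSL `refresh` command like the one this sketch produces. The database name `MyModel` is a placeholder for the semantic model name, and `build_tmsl_table_refresh` is a hypothetical helper. You would paste the printed JSON into an XMLA query window in SSMS connected to the workspace.

```python
import json


def build_tmsl_table_refresh(database: str, table: str,
                             refresh_type: str = "full") -> str:
    """Build a TMSL refresh command that processes a single table.
    Hypothetical helper; "database" is the semantic model name."""
    command = {
        "refresh": {
            "type": refresh_type,
            "objects": [{"database": database, "table": table}],
        }
    }
    return json.dumps(command, indent=2)


if __name__ == "__main__":
    # "MyModel" is a placeholder semantic model name.
    print(build_tmsl_table_refresh("MyModel", "dimension_city"))
```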