how to get oauth2 access tokens for data sources via data factory to refresh power bi semantic models (former datasets).

introduction.

In the previous blog post, we changed the owner of a semantic model from a personal account to a service principal. The credentials of this service principal are used to fetch tokens with which Power BI is able to authenticate to the data sources in order to ultimately pull data. Due to security reasons, this token will expire after a certain time forcing us to refresh the token regularly. If you are using data factory as your ETL tool, you could actually update the token right before triggering your semantic model refresh: A “just in time token refresh”, so to speak. In the following, we utilise data factory to first fetch a token for a data source (an Azure SQL Database in this example, although it also works for other audiences) in order to update the data source connection in Power BI with that exact token. Right after, we trigger a Power BI semantic model refresh from the same pipeline. Please note, we exclusively use API calls here, which you can call from any other client. For further inspiration, you can look into the Powershell script here (from line 43, an access token is fetched with which the data source is updated). Either way, you need to be able to first authenticate as the Power BI semantic model owner, though. In the following, as well as in the referred blog post, we impersonate a service principal owning the semantic model.

prerequisites.

plan of action.

1. What is our starting point?

Here a quick overview on what already is in place.

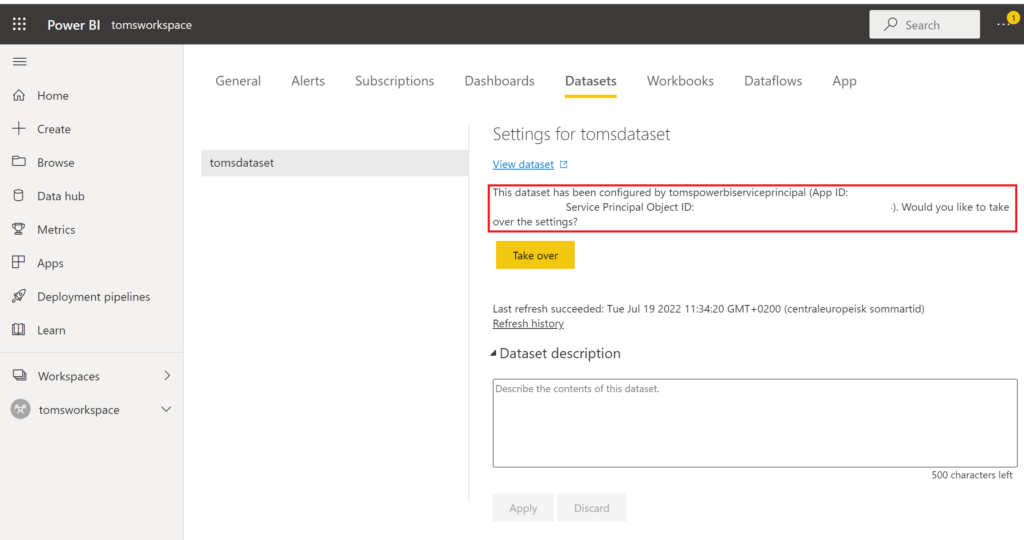

a. A Power BI semantic model tomsdataset with an Azure SQL Database as its data source. The owner of the semantic model is the service principle tomspowerbiserviceprincipal:

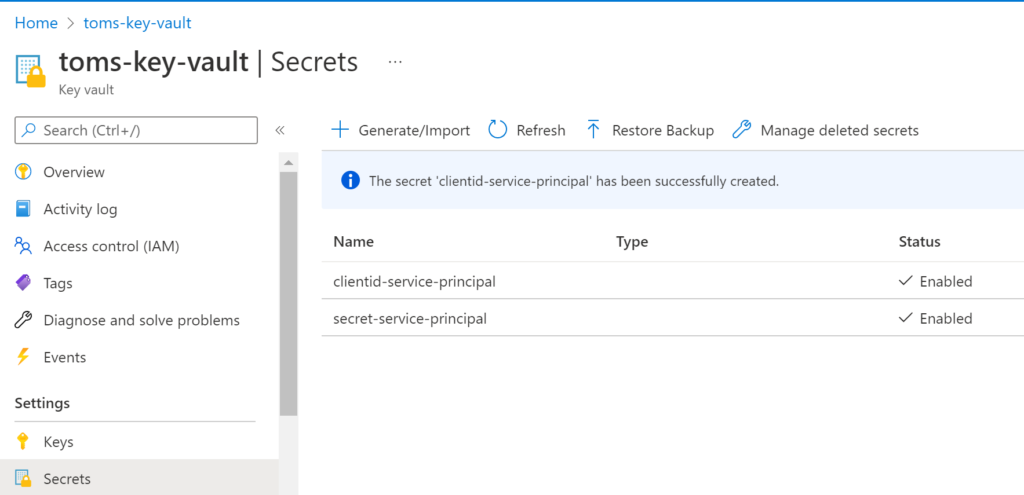

b. A key vault toms-key-vault with the secrets clientid-service-principal and secret-service-principal:

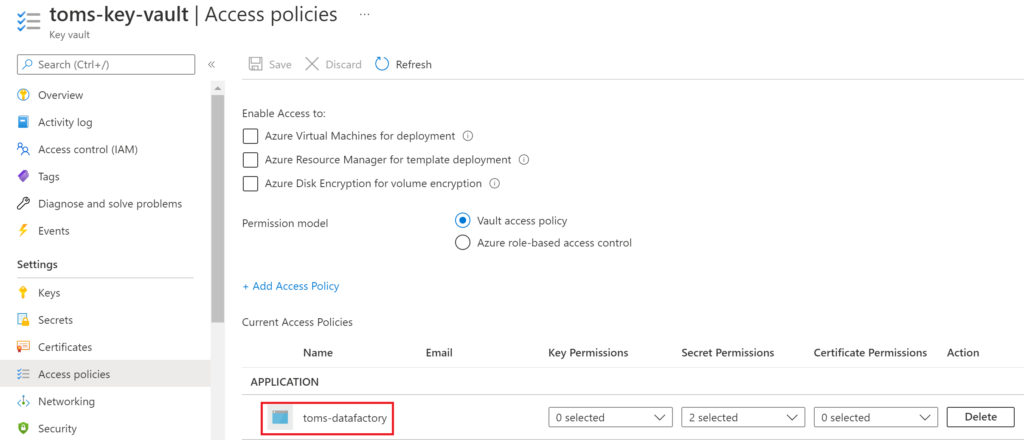

c. An access policy for the key vault that allows data factory to get and list secrets:

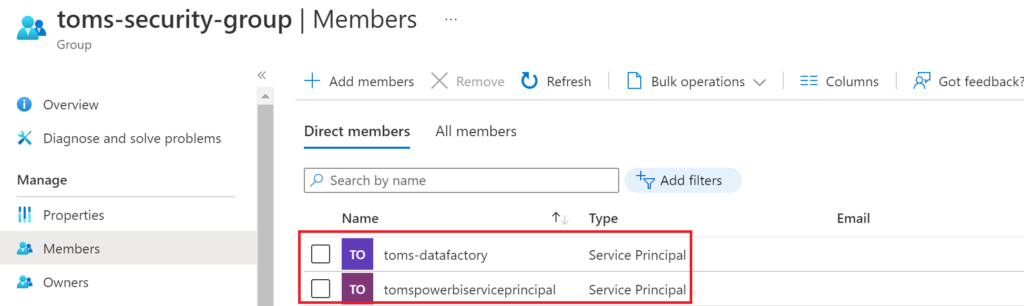

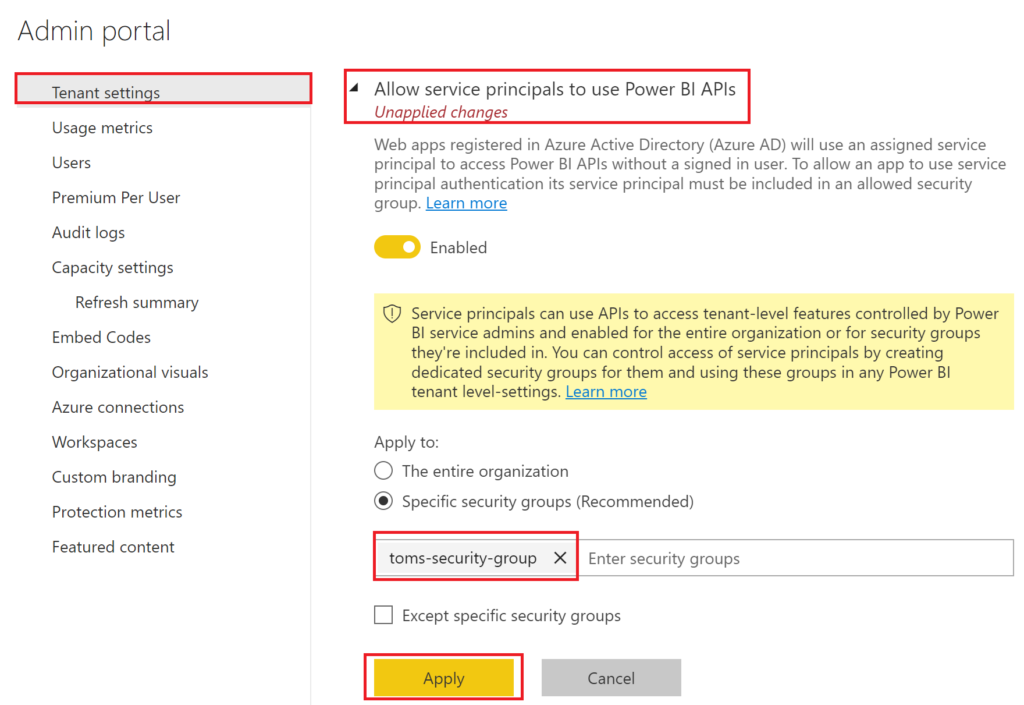

d. A security group toms-security-group that contains the data factory and the semantic model owning service principal as members. The security group needs to be allowed to use Power BI APIs, too.

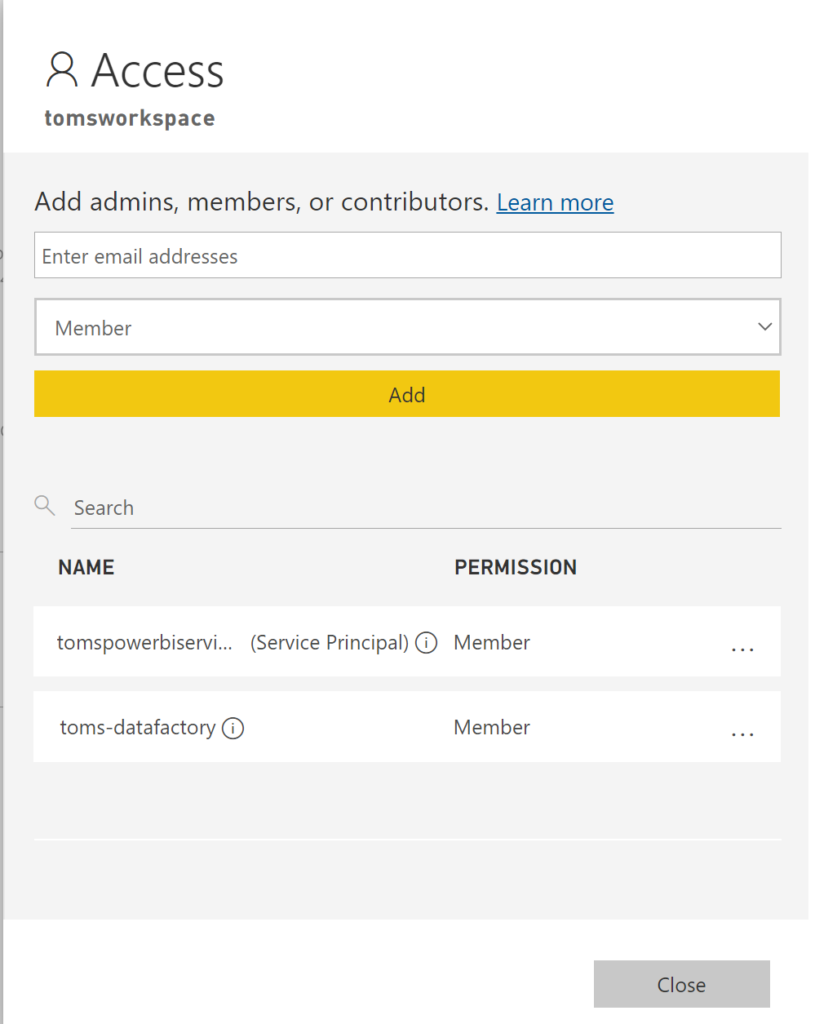

e. An access policy granting member access to the workspace tomsworkspace for the service principal tomspowerbiserviceprincipal and the data factory :

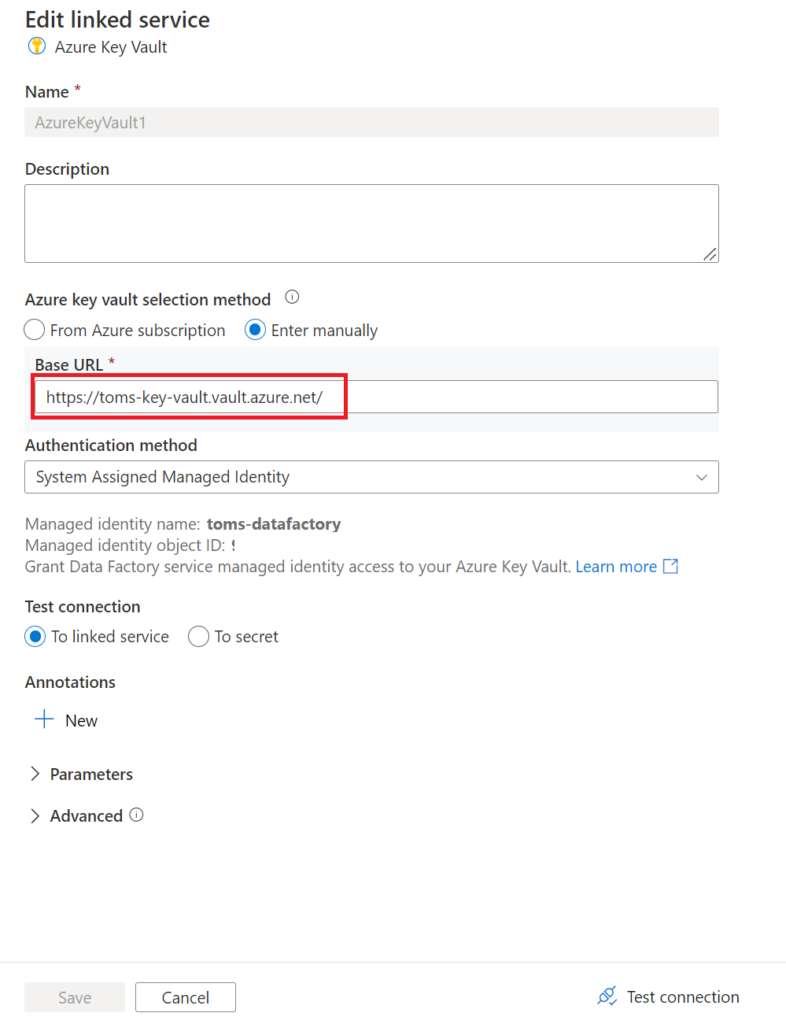

f. An Azure Key Vault linked service connection in data factory:

2. Create the data factory pipeline

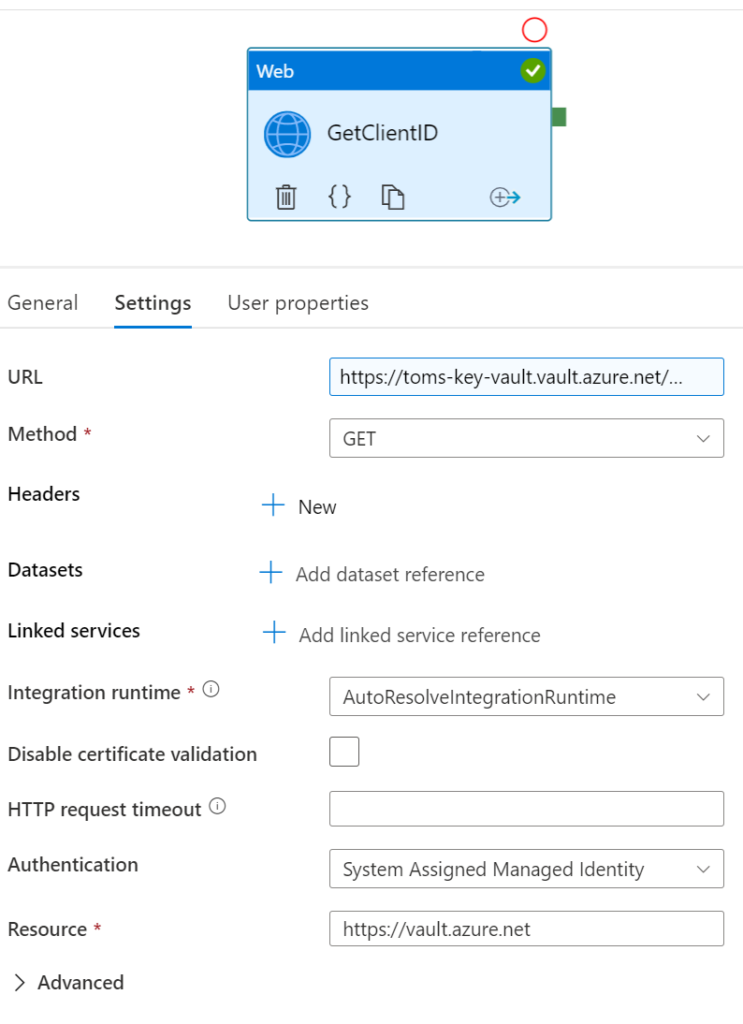

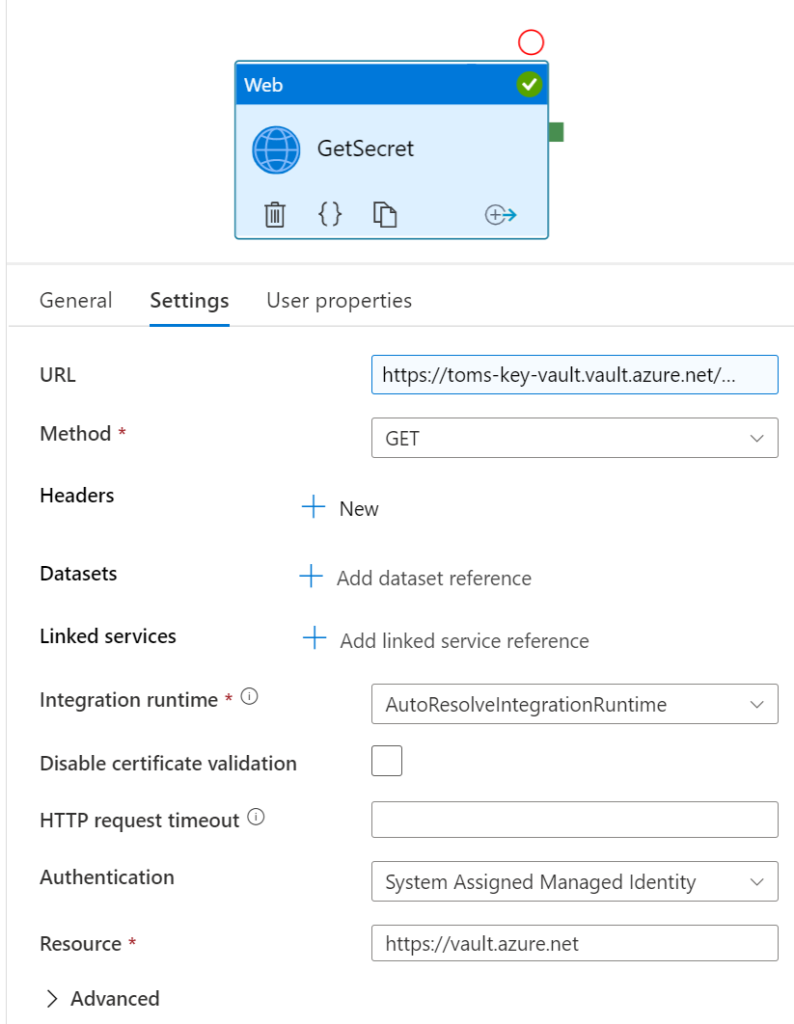

a. We start with two tasks retrieving the clientid and secret for the service principal from the Azure Key Vault.

The URL:

https://toms-key-vault.vault.azure.net/secrets/clientid-service-principal?api-version=2016-10-01

Both toms-key-vault and clientid-service-principal you probably need to adapt

The Method is Get

Pick System Assigned Managed Identity as Authentication

Lastly, set Resource to

https://vault.azure.net

The URL:

https://toms-key-vault.vault.azure.net/secrets/secret-service-principal?api-version=2016-10-01

Both toms-key-vault and secret-service-principal you probably need to adapt

The Method is Get

Pick System Assigned Managed Identity as Authentication

Lastly, set Resource to

https://vault.azure.net

Note, make sure to tick the boxes secure input / output when configuring the tasks:

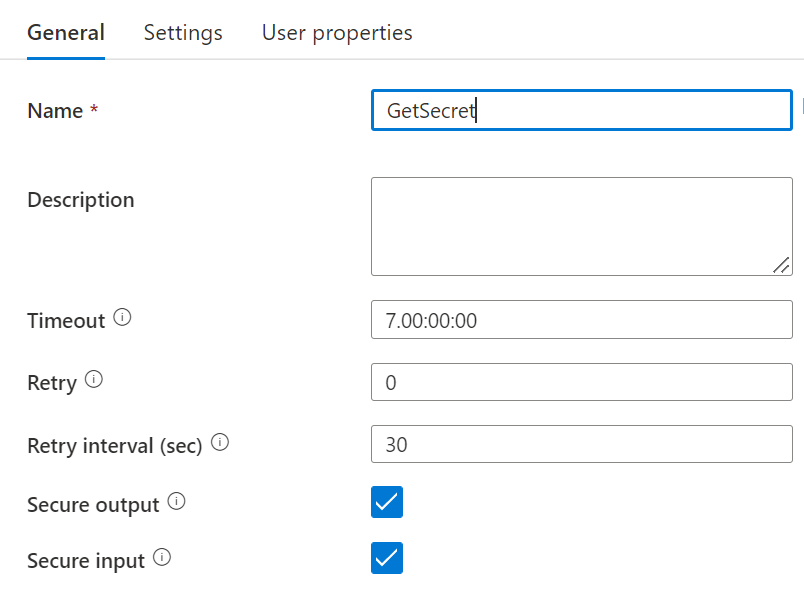

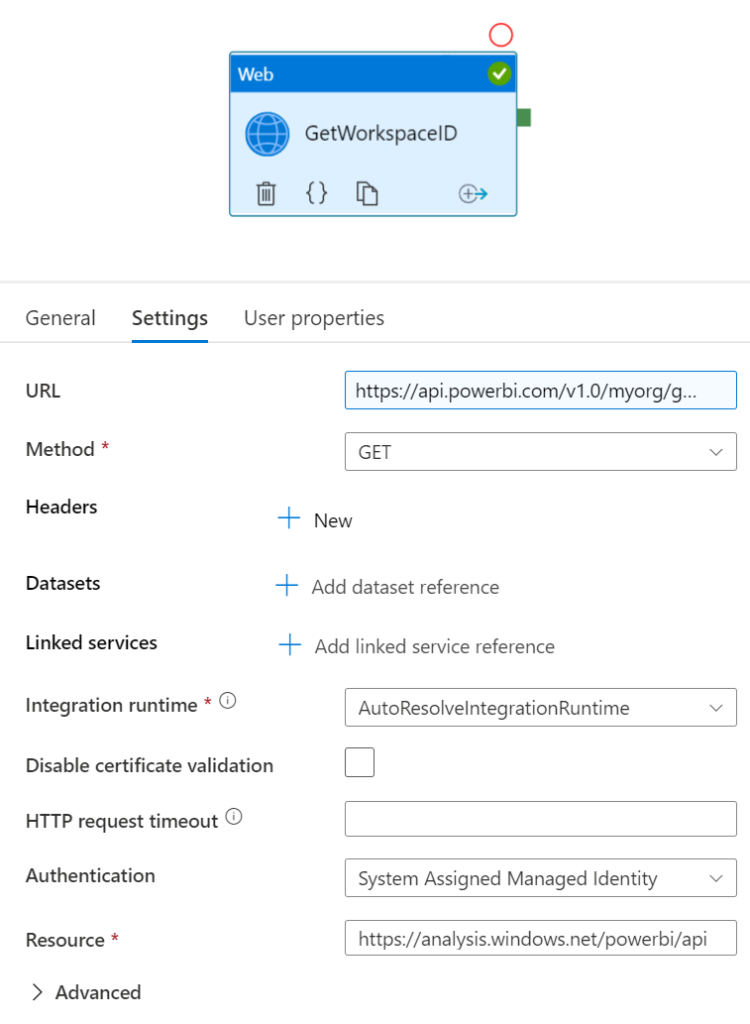

b. Next, we would like to get all data sources for the semantic model. In our example, we only have one data source. You will notice that we have a few tasks for this matter: One for getting the workspace, another one for the semantic model and a final one for the data source itself. Obviously, you could specify everything in only one task. In this pipeline, however, we try to provide a template that is rather easy to scale as with this layout, you could refresh all data sources in all semantic models within one workspace. In order to do so, consider looping over the output of GetAllDatasets and GetAllDatasources.

The URL:

https://api.powerbi.com/v1.0/myorg/groups?$filter=name eq 'tomsworkspace'

tomsworkspace is most likely to be substituted with the name of your Power BI workspace.

The Method is Get

Pick System Assigned Managed Identity as Authentication

Lastly, set Resource to

https://analysis.windows.net/powerbi/api

The URL follows this pattern:

@concat

The Method is Get

Pick System Assigned Managed Identity as Authentication

Lastly, set Resource to

https://analysis.windows.net/powerbi/api

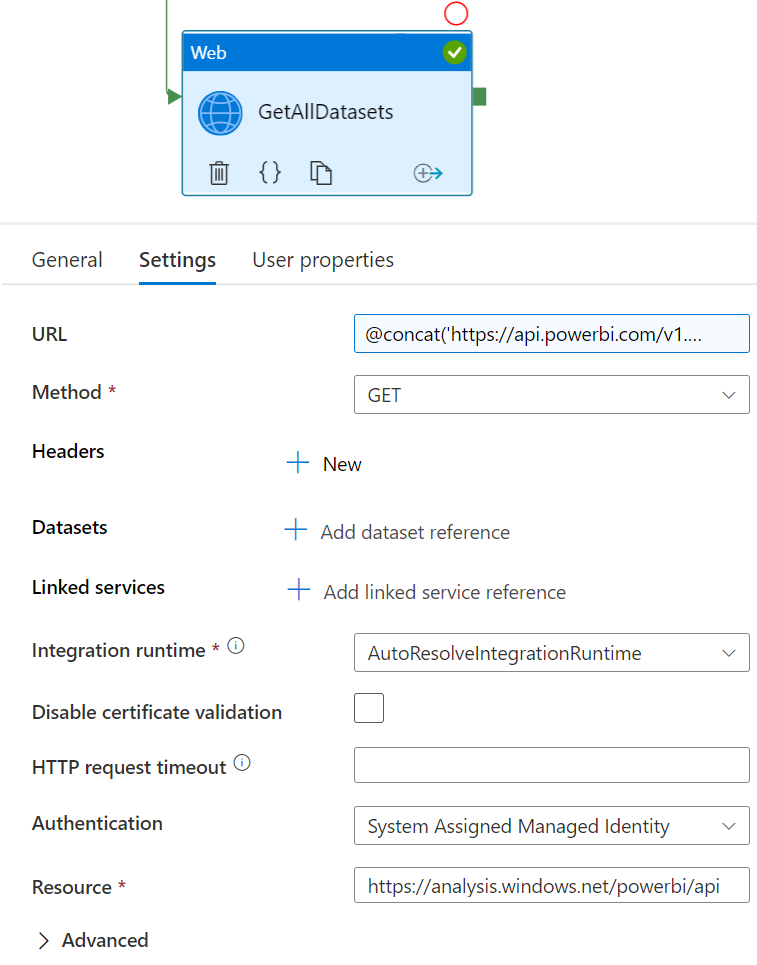

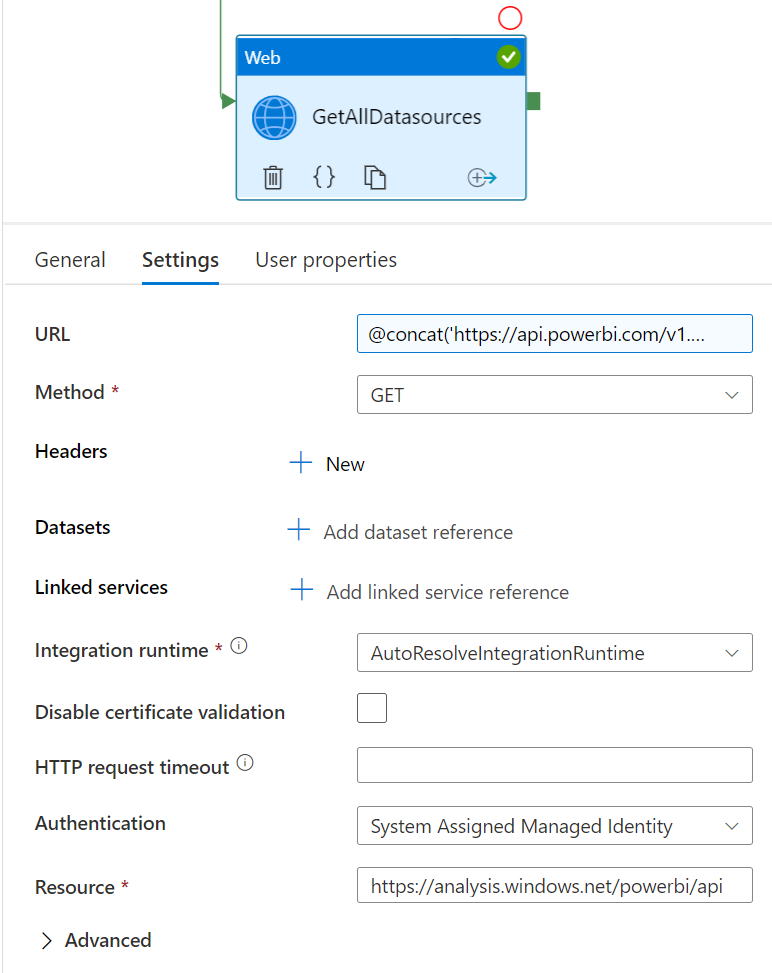

The URL follows this pattern:

@concat('https://api.powerbi.com/v1.0/myorg/groups/', activity('GetWorkspaceID').output.value[0].id, '/datasets/', activity('GetAllDatasets').output.value[0].id, '/datasources')

The Method is Get

Pick System Assigned Managed Identity as Authentication

Lastly, set Resource to

https://analysis.windows.net/powerbi/api

c. Now, we have everything in place to get the access token (in this example for an Azure SQL Database) and update the credentials. Like before, make sure to tick the secure input / output box when fetching and forwarding the access token to the Power BI Service.

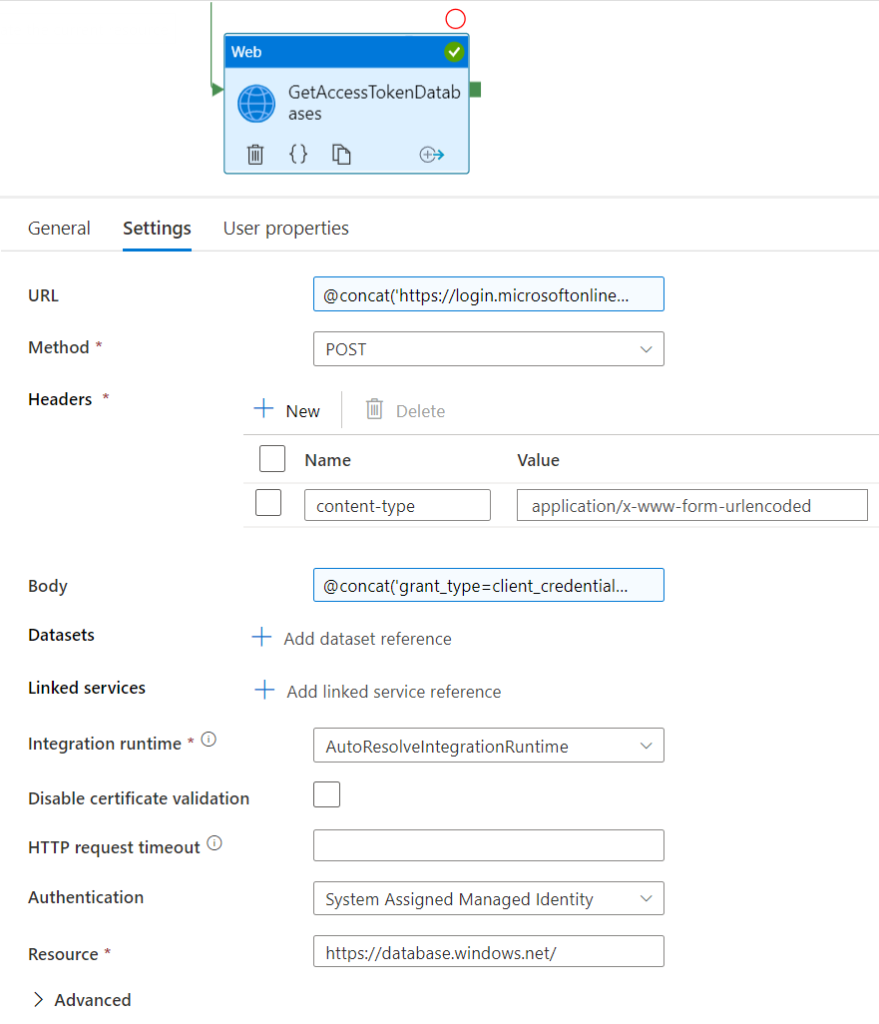

The URL:

@concat ('https://login.microsoftonline.com/[tenantID]/oauth2/token')

The tenantID you can find under tenant properties in the Azure portal

We also need a Header with the name content-type and value

application/x-www-form-urlencoded

The Method is Post

Paste the following into the Body

@concat ('grant_type=client_credentials&client_id=', activity('GetClientID').output.value, '&client_secret=', replace(activity('GetSecret').output.value, '+', '%2B'), '&resource=https://database.windows.net')

Pick System Assigned Managed Identity (Legacy: Managed Identity) as Authentication

Lastly, set Resource to

https://database.windows.net

The URL:

@concat ('https://api.powerbi.com/v1.0/myorg/gateways/', activity('GetAllDatasources').output.value[0].gatewayid, '/datasources/', activity('GetAllDatasources').output.value[0].datasourceid)

We also need a Header with the name content-type and value

application/json

The Method is Patch

Paste the following into the Body

@concat ('{"credentialDetails" : { "credentialType" : "OAuth2", "credentials" : "{"credentialData":[{"name":"accessToken", "value":"',activity('GetAccessTokenDatabases').output.access_token , '"}]}", "encryptedConnection" : "Encrypted", "encryptionAlgorithm" : "None", "privacyLevel" : "None"}}')

Pick Service Principal as Authentication

The tenant (TenantID) you can find under tenant properties in the Azure portal

Service Principal ID

@activity('GetClientID').output.value

Lastly, set Resource to

https://analysis.windows.net/powerbi/api

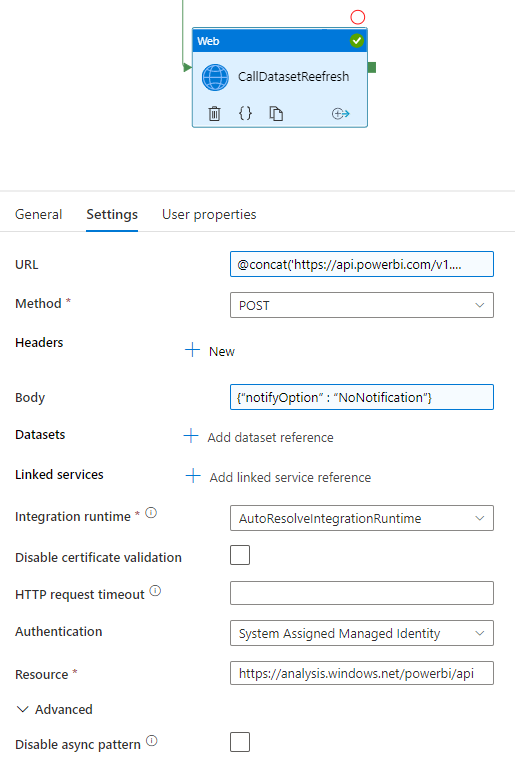

d. Lastly, we just need to refresh the semantic model with the new token.

The URL:

@concat ('https://api.powerbi.com/v1.0/myorg/groups/', activity('GetWorkspaceID').output.value[0].id, '/datasets/', activity('GetAllDatasets').output.value[0].id, '/refreshes')

Both the workspaceID and the datasetID can be copied from the address / URL bar by clicking onto the dataset in Power BI Service.

The Method is Post

Paste the following into the Body

{"notifyOption" : "NoNotification"}

Pick System Assigned Managed Identity (Legacy: Managed Identity) as Authentication

Lastly, set Resource to

https://analysis.windows.net/powerbi/api

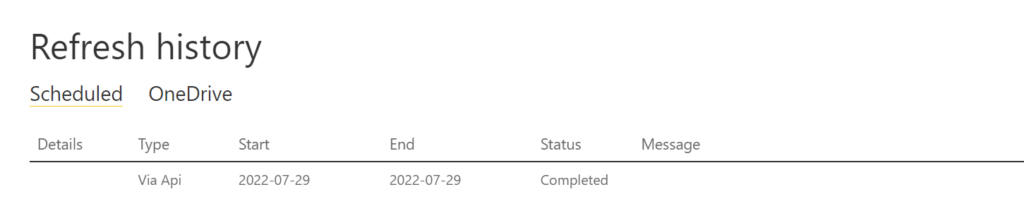

3. Showtime!

If configured everything like above, the pipeline should run succesfully. Note, the last task is an asynchronous API call meaning it just triggers a semantic model refresh. We do not know, when and whether the semantic model refreshment is finished. One way to work around this issue is to ask for the status of the refresh after a certain time (use same api call but with the GET method). If necessary combine it with an until loop task (until status is not “executing”).

end.

If you need to fetch tokens for other audiences / resources than Azure SQL, check out this one here. And if you’d like to change the data source to another type of credentials (i.e. anonymous or basic), then this Microsoft’s documentation is a good resource. Otherwise, enjoy your secure and automated Power BI semantic model refreshes!

– Hi, do we need to set OAuth in the Power BI dataset credentials (Authentication method) as well because this does not seem to work for me on the (Update dataset credentials) step.

– Also, how to get the Dataset Id if we pass a specific dataset name for a Workspace.

Hi Amit!

Thanks for reaching out! :)

Yes, you need to give Power BI the instruction to use the oauth method to authenticate to your data source(s). Please note, in the example above it is a service principal which owns the dataset. This service principal is then used by data factory to first fetch the oauth token from AAD. Afterwards, the Power BI dataset connection is updated with that exact token. To find out more, I recommend checking out this blog post

The DatasetID can be extracted from the URL. Just go to https://app.powerbi.com/ and navigate to your workspace. Then, click on the dataset and your URL should look similar to https://app.powerbi.com/groups/YOURWORKSPACEID/datasets/YOURDATASETID/details.

So, if you want to make your pipeline less dynamic than the blog post suggests, just use that exact URL for your API calls.

Hope this helps!

/Tom