how to refresh power bi semantic models (former datasets), tables and partitions with azure functions.

introduction.

There are plenty of ways to trigger a semantic model refresh in Power BI. For instance, you could set up a schedule refresh in the service directly, use a Logic App or Azure Data Factory, utilising the REST API or even the XMLA endpoint. In fact, we have provided two blog posts using the “refreshes” REST API to process both a whole semantic model and individual tables and even partitions with Azure Data Factory and its managed identity. In this blog post, however, we will be using an Azure Function to achieve the same. Likewise, we are going for the function’s managed identity for easy authentication.

.

prerequisites.

1. A Power BI premium capacity or a PPU license. XMLA endpoints can only be used on premium workspaces

2. A semantic model published to a premium workspace

3. Permissions to create and manage Azure Functions as well as the right to change settings in Power BI’s Admin portal

.

plan of action.

1. What is our starting point?

2. Create the Azure Function

3. Fix Power BI access and settings

4. Showtime!

.

1. What is our starting point?

Let’s quickly recap the prerequisites.

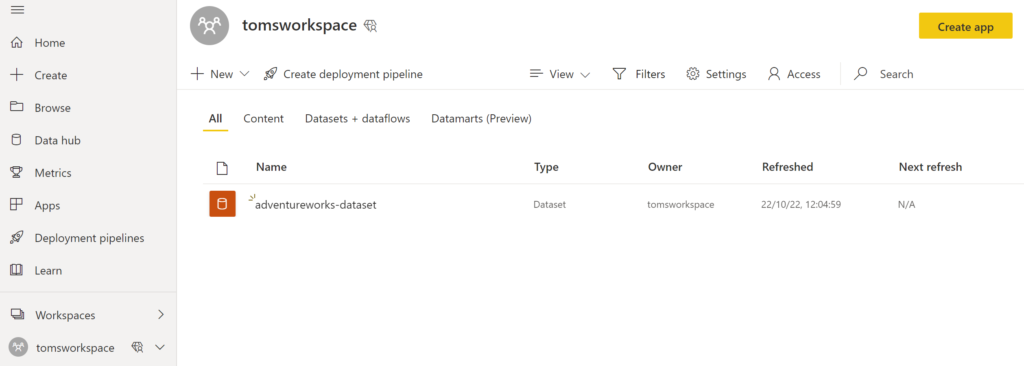

. A premium workspace in Power BI with a semantic model:

.

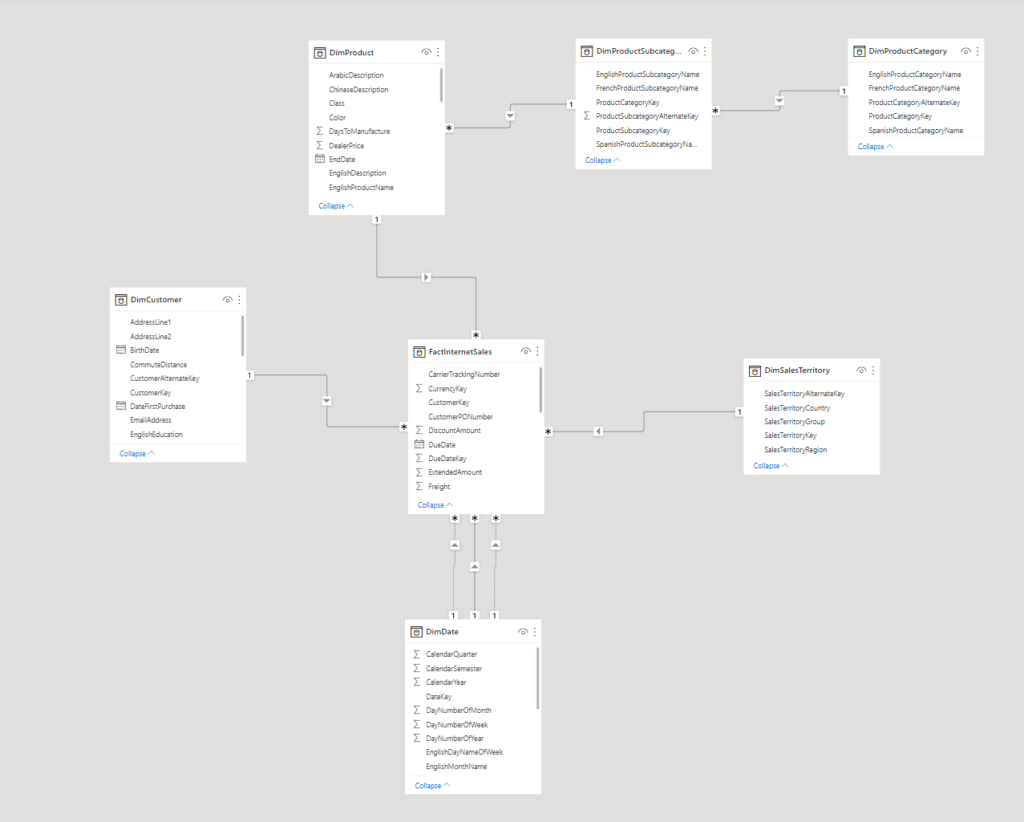

Our model looks like this:

.

We make use of the large AdventureWorks sample in an Azure SQL Database. If you would like to find out how to add the real AdventureWorks sample (and not the lightweight version) to an Azure SQL database, make sure to check out this blog post.

.

2. Create the Azure Function

We start by creating the resources. For this, go into Azure and browse for Azure Functions on the Marketplace:

.

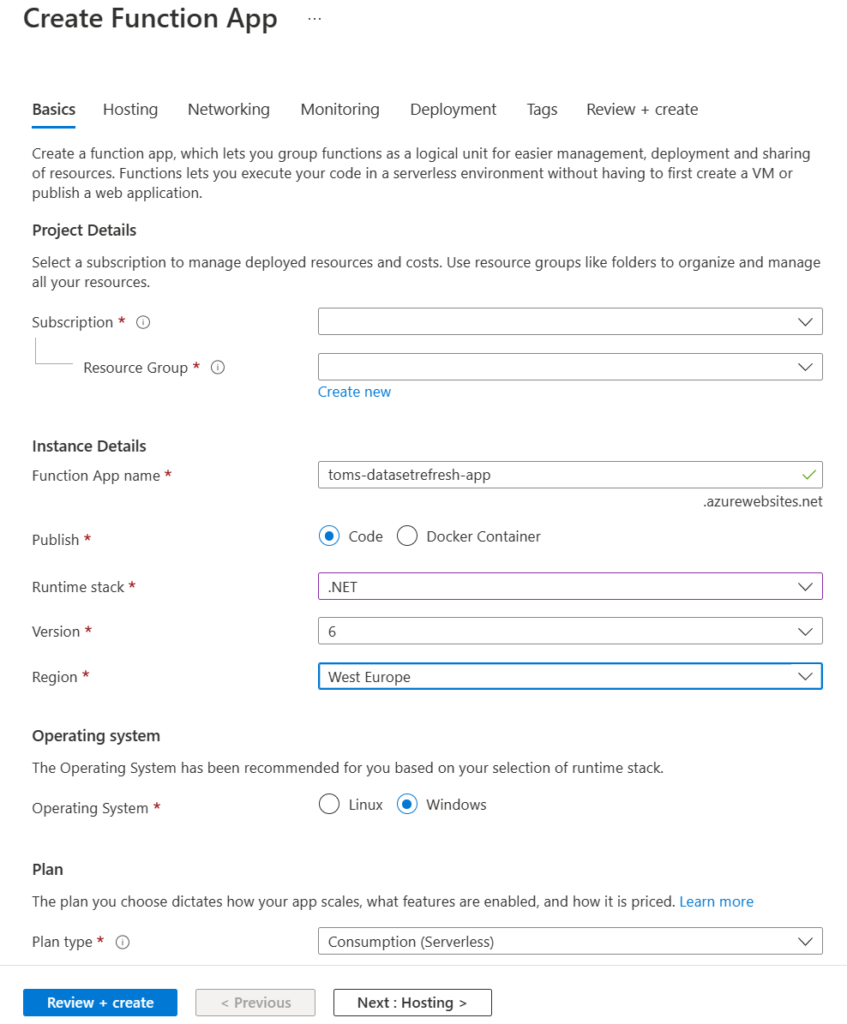

Choose the following settings for the Function App:

.

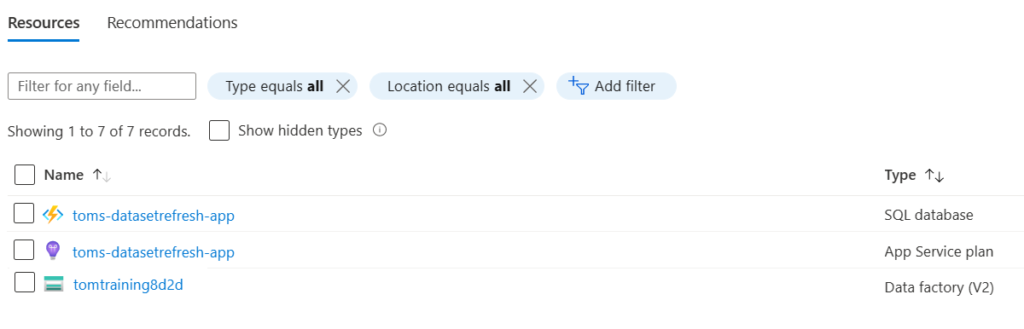

When the resources have been created, Azure should provided you with more than one resource: A Storage Account, an App Service Plan and the Function App:

.

The storage account is needed for logging purposes as well as managing triggers. The app service plan resource, on the other hand, contains the hosting plan and provides some additional functionality, such as VNet capabilities. Lastly, the Function App contains the code that shall be run. In the context of this blog post, we solely focus on the Function App toms-datasetrefresh-app.

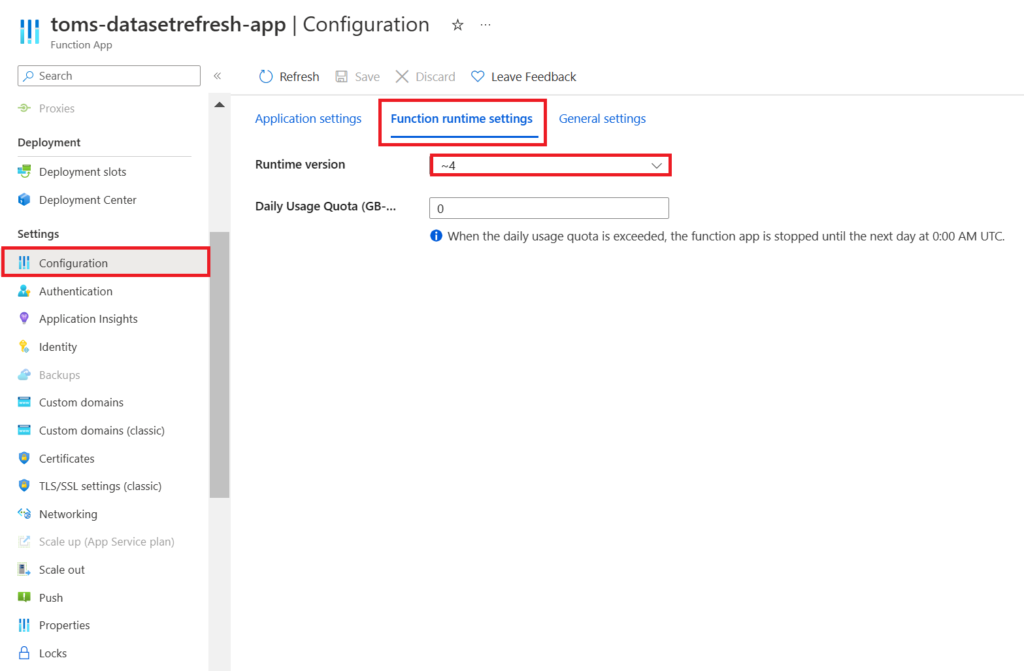

Make sure you are using Runtime version 4:

.

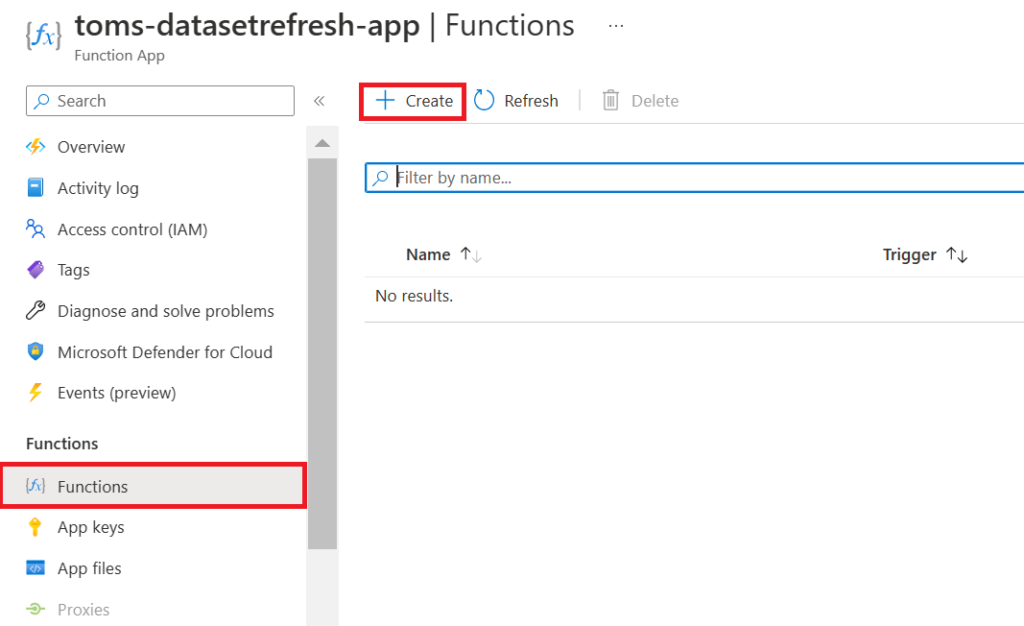

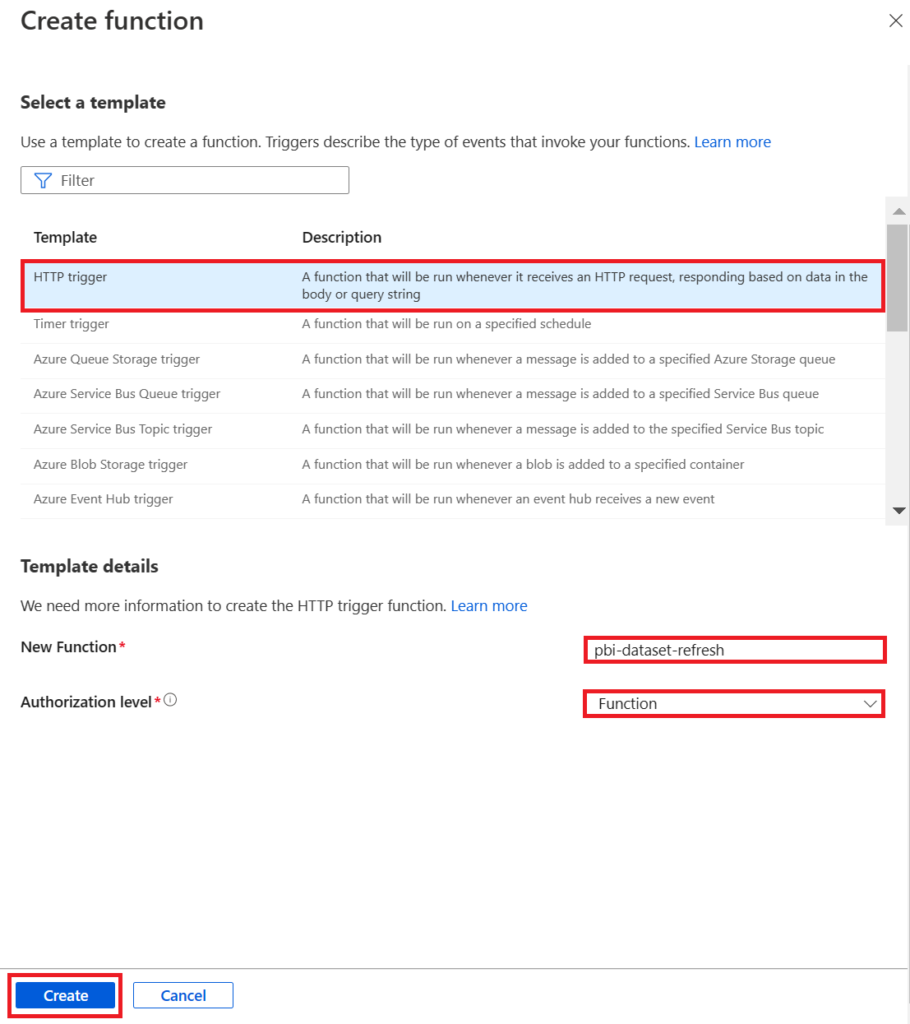

Now, click Functions, press Add and select the HTTP trigger sample.

.

Give the function a reasonable name, pick Function as Authorization level and press Create Function.

.

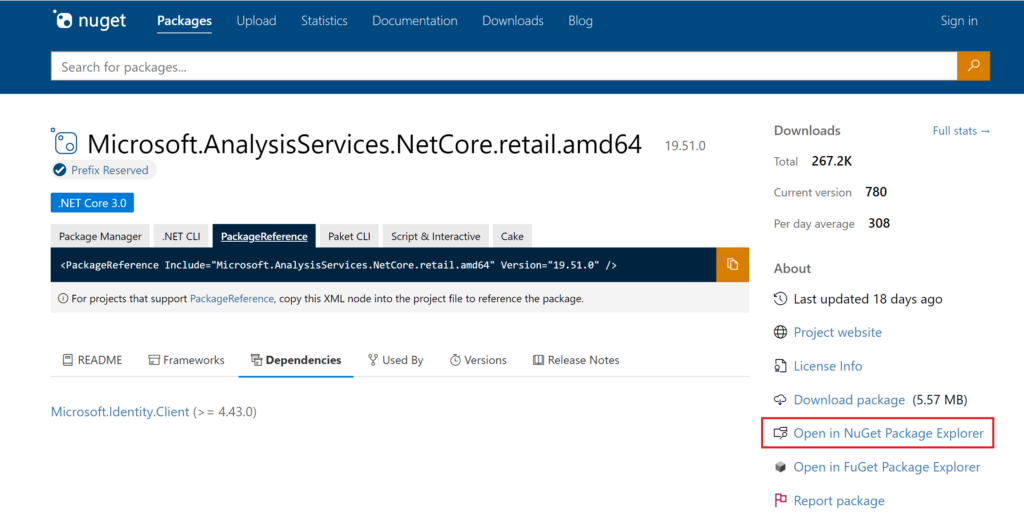

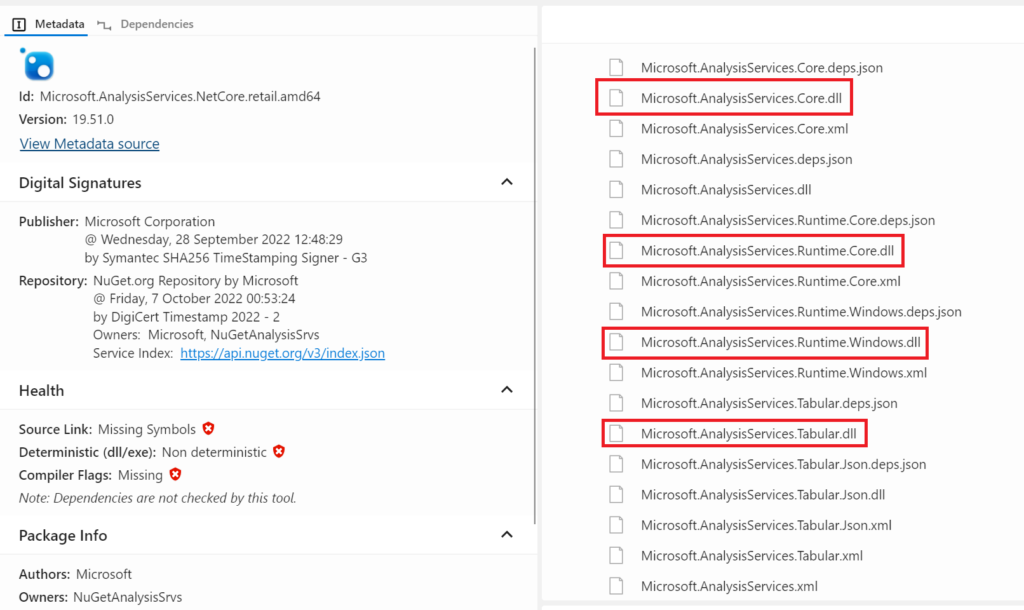

Before we create the code in the function, we need to add the ddl assemblies Microsoft.AnalysisServices.Tabular.dll, Microsoft.AnalysisServices.Core.dll, Microsoft.AnalysisServices.Runtime.Core.dll, Microsoft.AnalysisServices.Runtime.Windows.dll Azure.Identity.dll and Azure.Core.dll from the Analysis Services Client Libraries. Download the Analysis Services packages from here by opening Nuget Package Explorer:

.

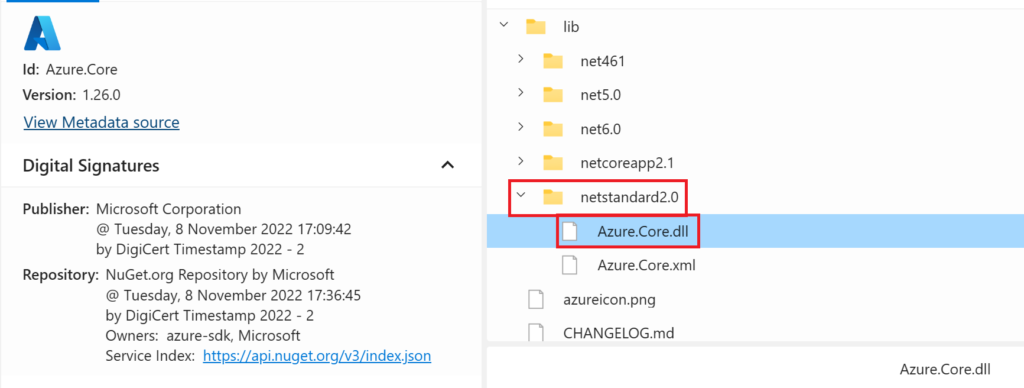

Open first the lib folder, then the netcoreapp3.0 and scroll down to find the dll packages:

.

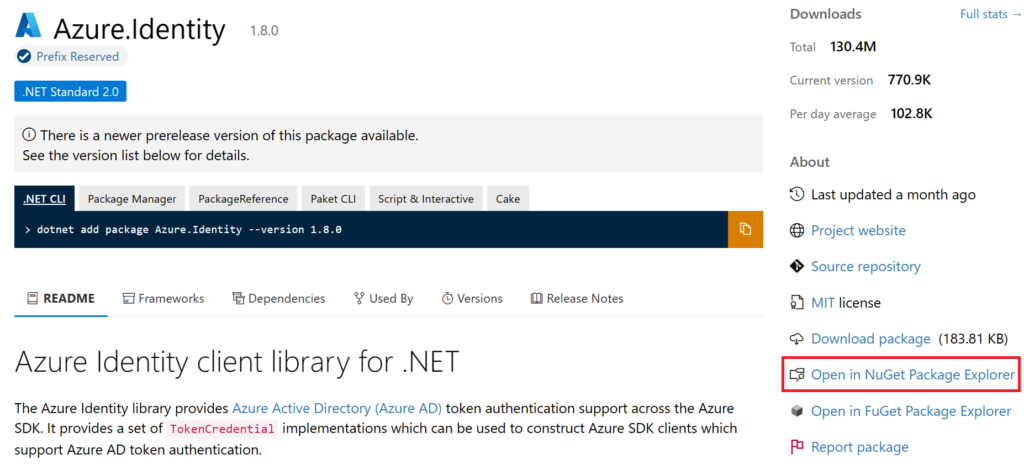

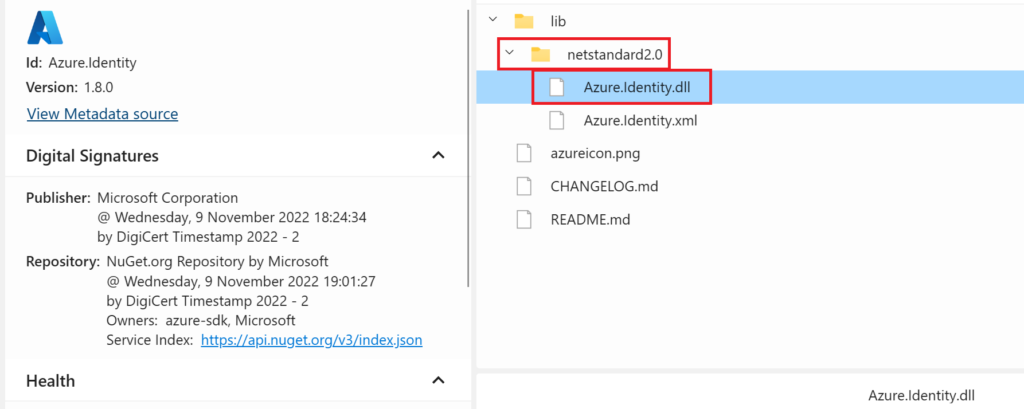

For the Azure.Identity.dll, use this Nuget link, open Nuget Package Explorer and download the package:

.

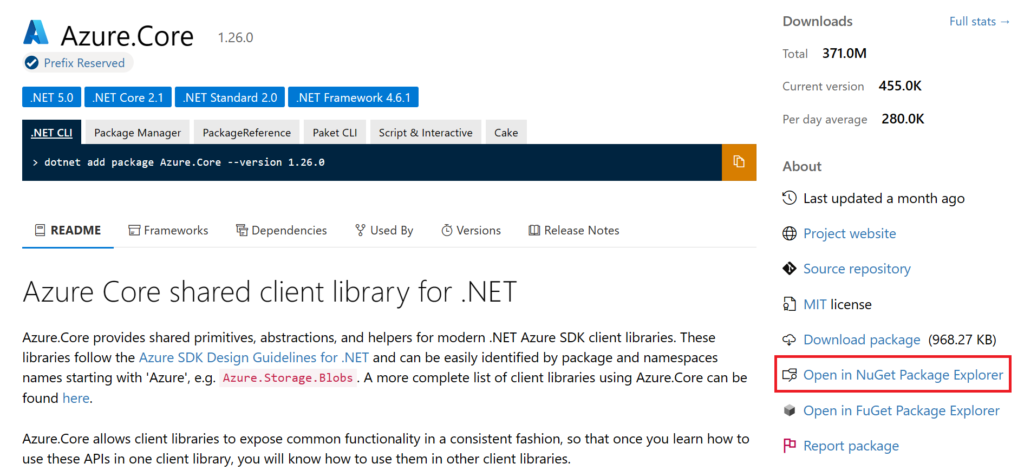

And for the Azure.Core.dll, use this Nuget link, open Nuget Package Explorer and download the package:

.

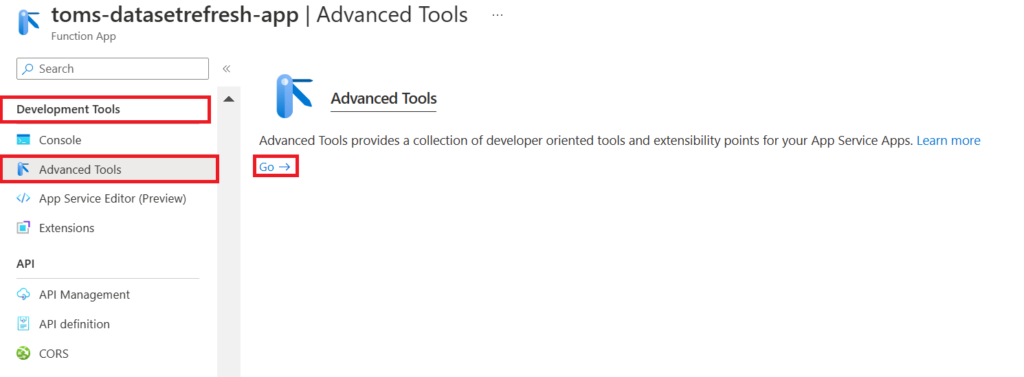

Afterwards, go to Advanced Tools under the Development Tools ribbon and click Go.

.

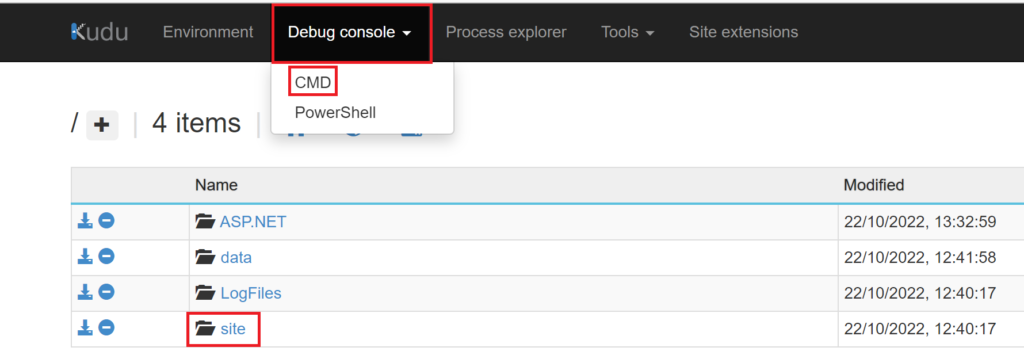

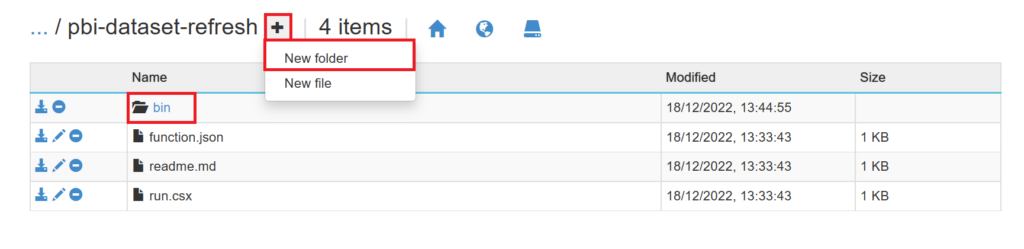

Under Debug console, press CMD and navigate to site > wwroot > YourFunction (pbi-dataset-refresh)

.

Then, create a New folder called “bin”

.

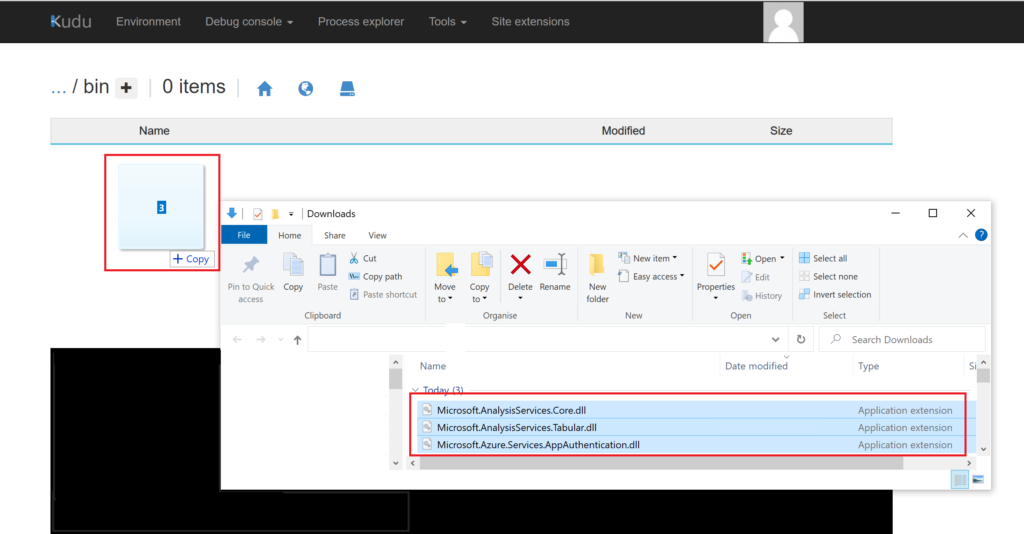

Open the new folder and drag & drop the files Microsoft.AnalysisServices.Runtime.Windows.dll, Microsoft.AnalysisServices.Tabular.dll, Microsoft.AnalysisServices.Core.dll, Microsoft.AnalysisServices.Runtime.Core.dll, Azure.Identity.dll and Azure.Core.dll into the folder:

.

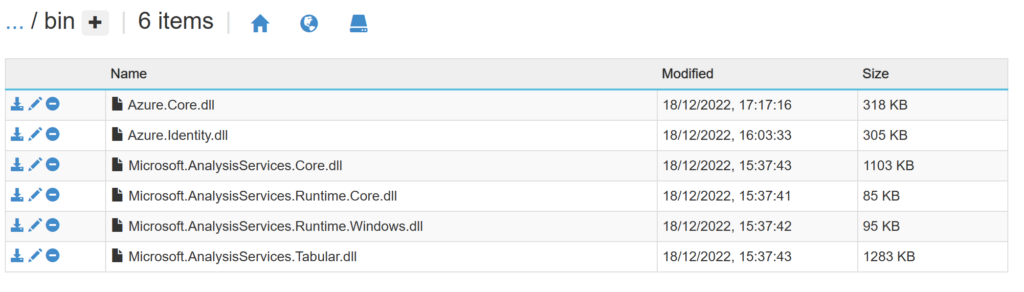

In the end, it should look like the following screen:

.

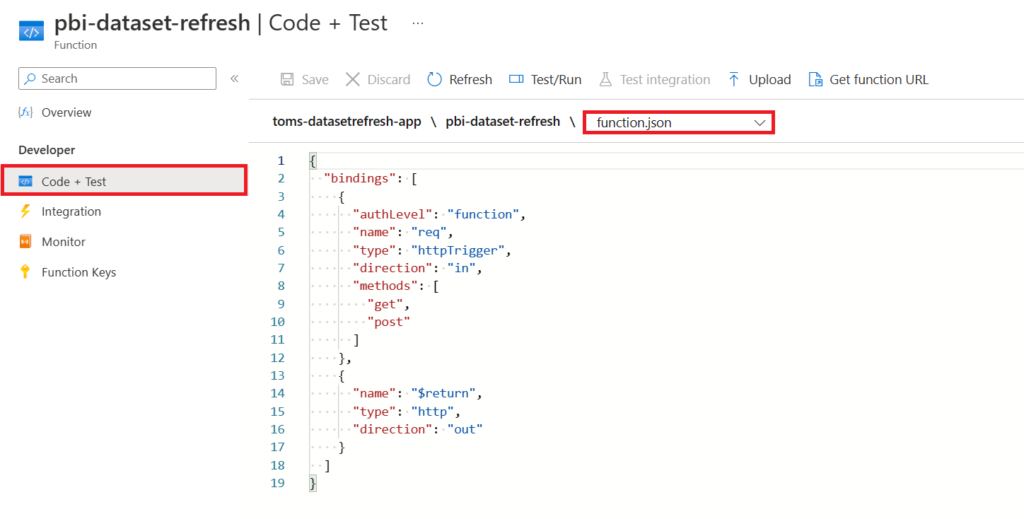

Now, navigate back to the function. The function.json file shall look like the following. This is the default code if you chose the HTTP sample before.

{

"bindings": [

{

"authLevel": "function",

"name": "req",

"type": "httpTrigger",

"direction": "in",

"methods": [

"get",

"post"

]

},

{

"name": "$return",

"type": "http",

"direction": "out"

}

]

}

.

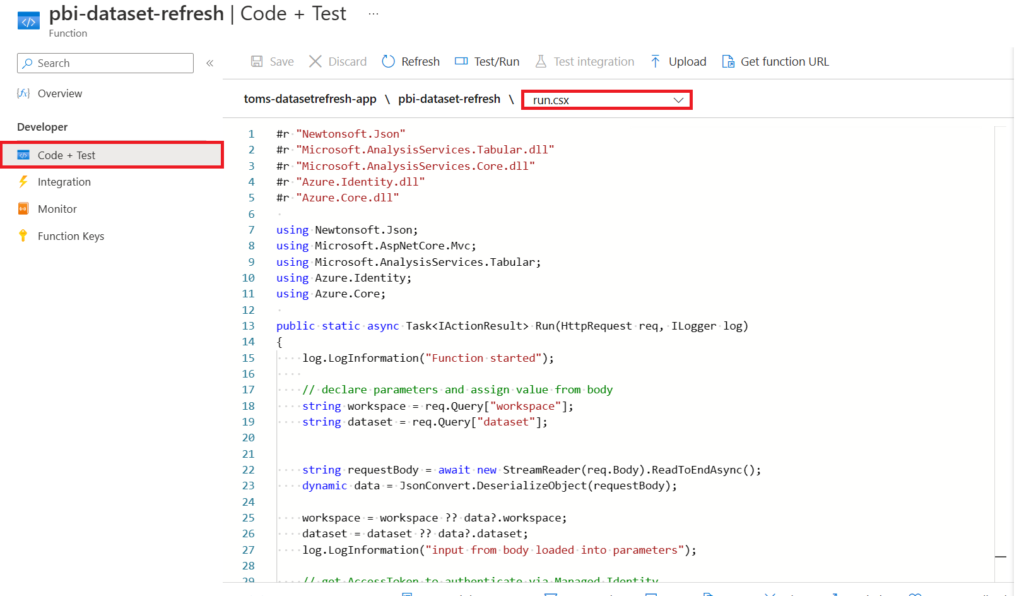

Next, select run.csx and add the code that actually does the work:

#r "Newtonsoft.Json"

#r "Microsoft.AnalysisServices.Tabular.dll"

#r "Microsoft.AnalysisServices.Core.dll"

#r "Azure.Identity.dll"

#r "Azure.Core.dll"

using Newtonsoft.Json;

using Microsoft.AspNetCore.Mvc;

using Microsoft.AnalysisServices.Tabular;

using Azure.Identity;

using Azure.Core;

public static async Task<IActionResult> Run(HttpRequest req, ILogger log)

{

log.LogInformation("Function started");

// declare parameters and assign value from body

string workspace = req.Query["workspace"];

string dataset = req.Query["dataset"];

string requestBody = await new StreamReader(req.Body).ReadToEndAsync();

dynamic data = JsonConvert.DeserializeObject(requestBody);

workspace = workspace ?? data?.workspace;

dataset = dataset ?? data?.dataset;

log.LogInformation("input from body loaded into parameters");

// get AccessToken to authenticate via Managed Identity

var tokenCredential = new DefaultAzureCredential();

var resource = "https://analysis.windows.net/powerbi/api";

var token = await tokenCredential.GetTokenAsync( new TokenRequestContext( new string[] { $"{resource}/.default" }));

log.LogInformation("AccessToken fetched from https://analysis.windows.net/powerbi/api");

// build connection string + connect to the Power BI workspace

string connStr = $"DataSource=powerbi://api.powerbi.com/v1.0/myorg/{workspace};Password={token.Token};";

Server pbiserver = new Server();

pbiserver.Connect(connStr);

log.LogInformation("Connection to Power BI server established");

// define variables

Database db = pbiserver.Databases.GetByName(dataset);

Model m = db.Model;

log.LogInformation("Power BI objects saved in variables");

// start refresh

m.RequestRefresh(RefreshType.DataOnly);

m.SaveChanges();

log.LogInformation("Power BI dataset refresh triggered");

// disconnect from Power BI service

pbiserver.Disconnect();

log.LogInformation("Disconnected from Power BI service ");

// return response in JSON format

string outputResponse =

@"{

""workspace"": """ + workspace + @""",

""dataset"": """ + dataset + @""",

""response"": ""triggered refresh for dataset " + dataset + " in workspace " + workspace + @"""

}";

return new OkObjectResult(outputResponse);

}

.

So, what is the script exactly doing? At the very start, the script refers to the TOM assemblies followed by taking in the parameters provided in the request body. Here a short clarification for each of them:

workspace = name of Power BI workspace

dataset = name of dataset

After that, we fetch an access token from https://analysis.windows.net/powerbi/api. Interesting enough, this token comes from the powerbi/api but also works for the XMLA endpoint. This is quite a cool one, because you could use the same token to do different things using different endpoints. This Microsoft documentation explains the phenomenon in more detail (see paragraph Authenticating with an Azure AD access token). Anyway, the script uses the token to authenticate and connect to Power BI (with the Azure.Identity.dll package). Afterwards, it saves the objects database and model variables and kickstarts the semantic model refresh. Note, in the script above we only refresh the data (RefreshType: DataOnly). To find out about the other refresh types, check out this Microsoft documentation. Finally, the script disconnects from the Power BI service and returns a message in JSON format. Also, if you’d like to refresh just specific tables or partitions, I refer to the script in the Appendix.

.

3. Fix Power BI access and settings.

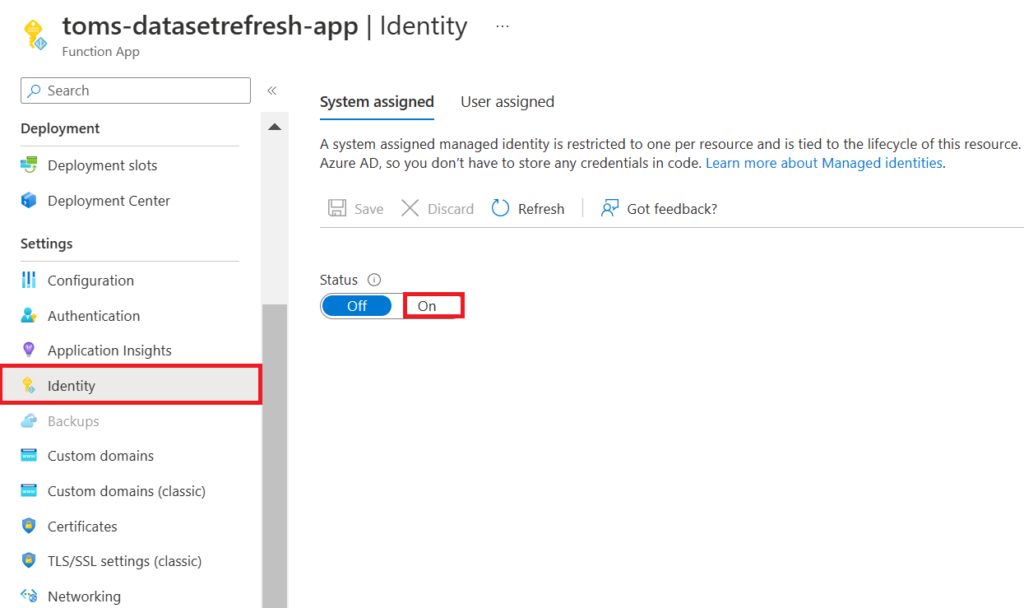

Turn on the System Assigned Managed Identity, if it has not already been activated:

.

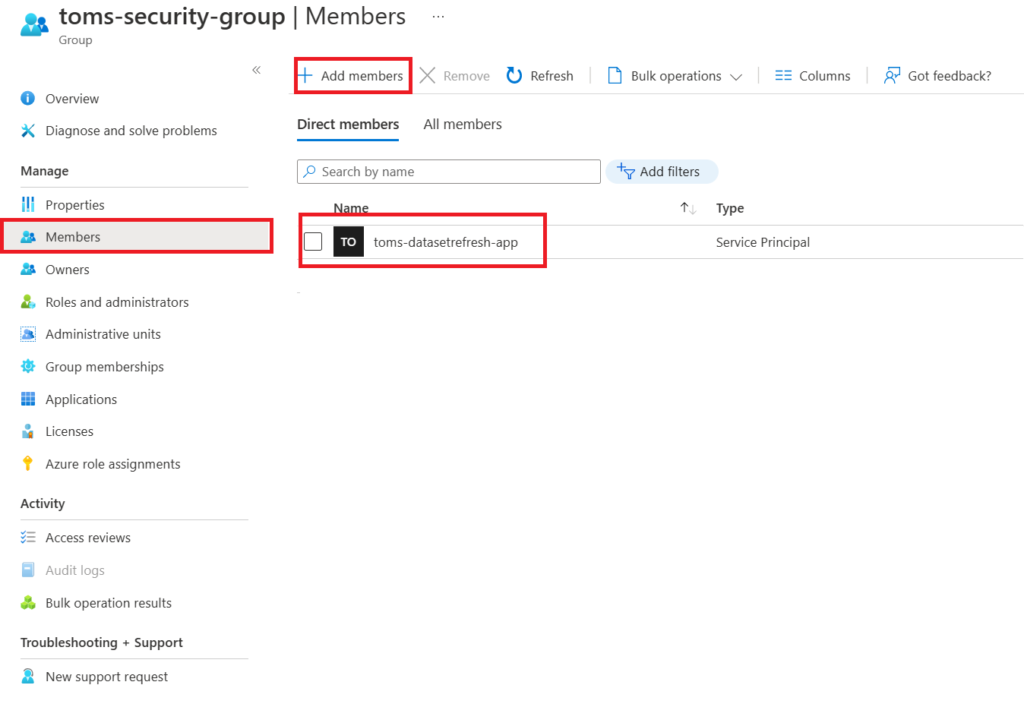

Then, create a security group in Azure and add the function app as a member:

.

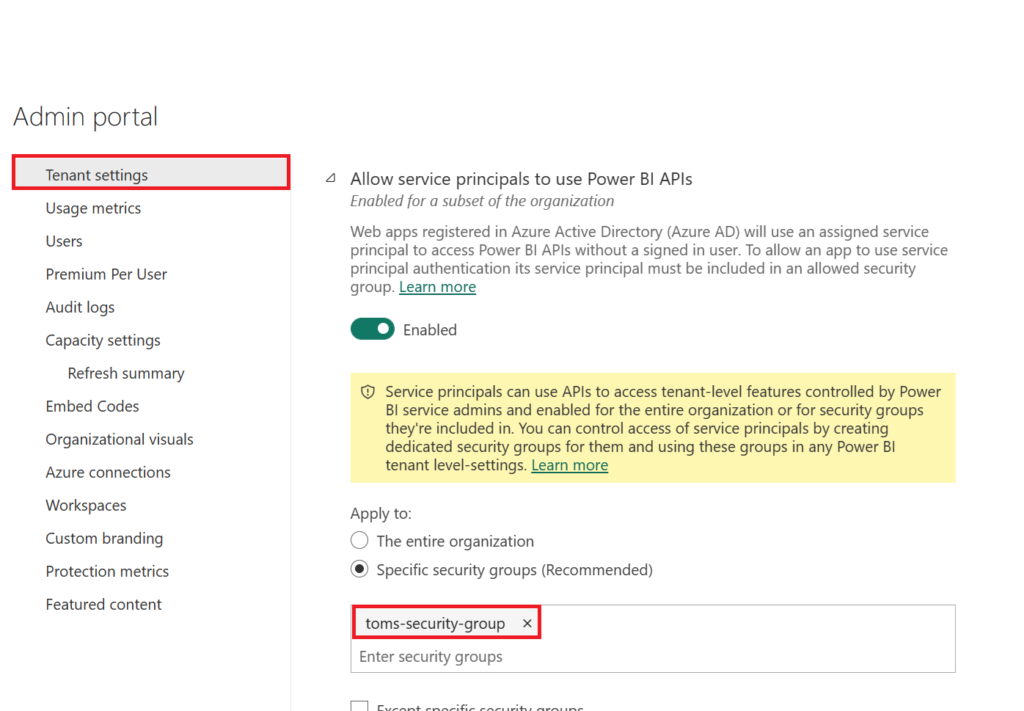

In the Admin portal, add that security group to the Admin API setting that allows service principals to use Power BI APIs.

.

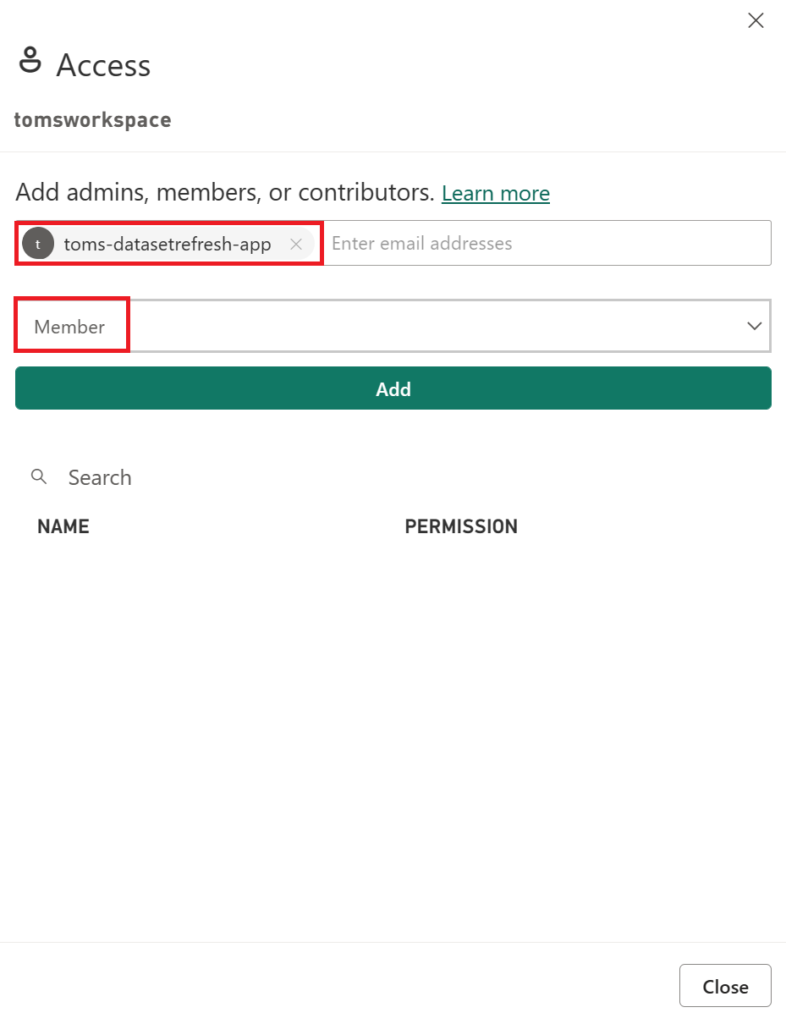

In the following, go into Power BI and add the function to your workspace with the Member role.

.

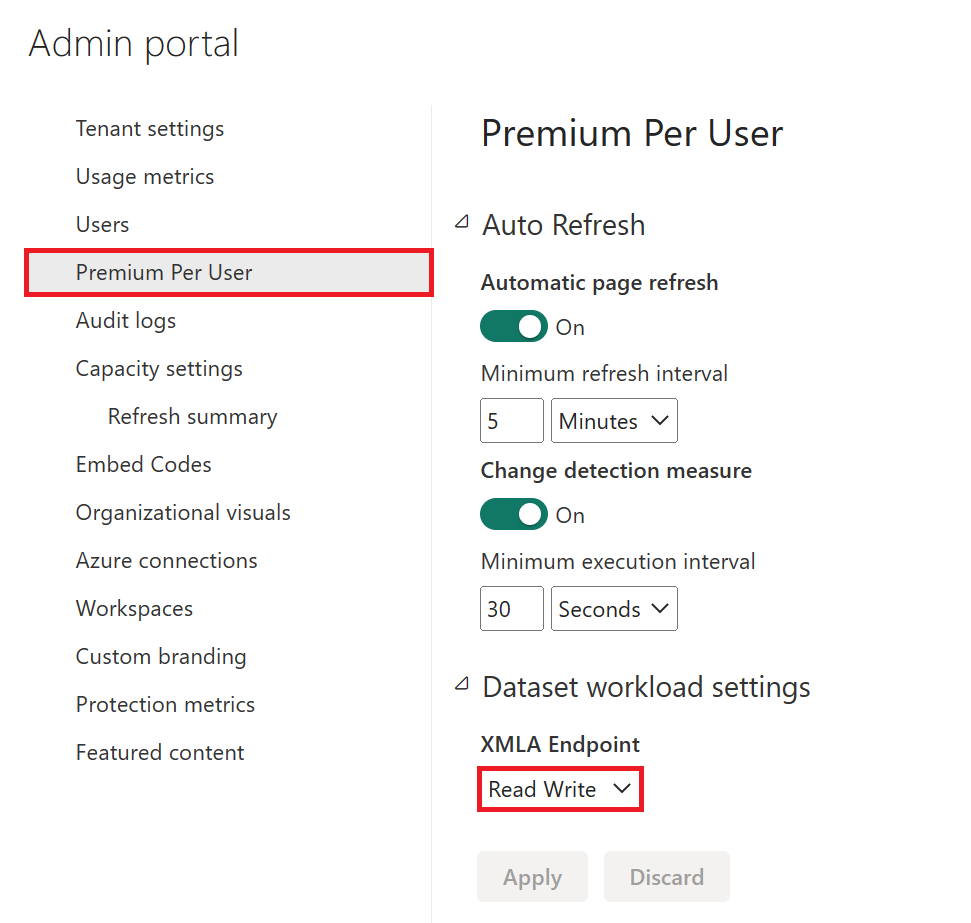

We are almost there! We only need to tell Power BI to allow read/write on XMLA endpoints. For this, navigate to the Admin portal and click on Premium Per User (or Capacity settings depending on what you are using). Finally, pick Read Write in the XMLA Endpoint dropdown:

.

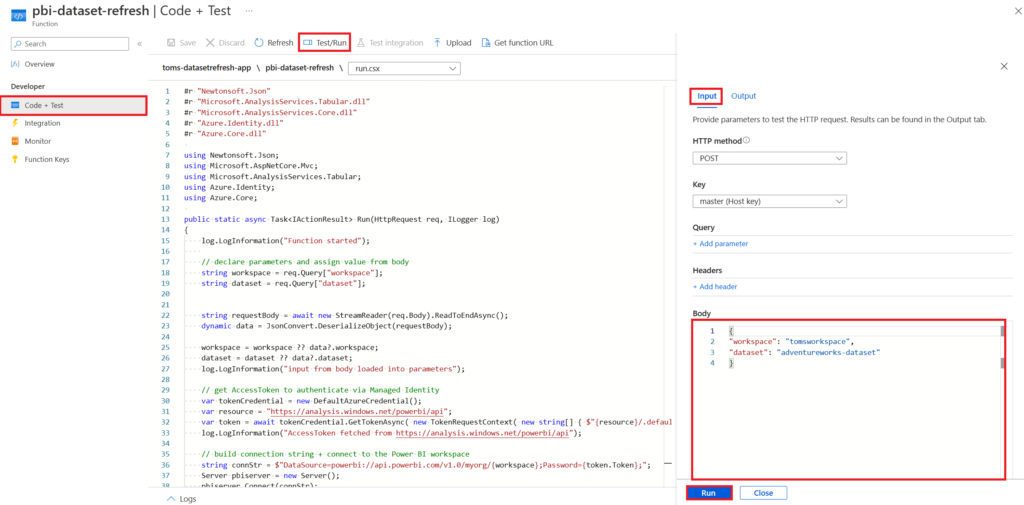

4. Showtime!

Now showtime! Let’s run it!

.

For the example above, we use the body below:

{

"workspace": "tomsworkspace",

"dataset": "adventureworks-dataset"

}

.

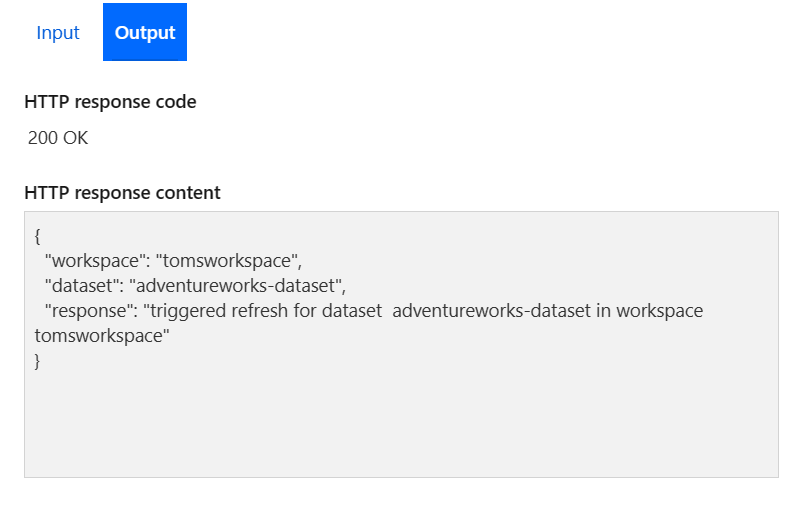

In the following, the response we should get back:

.

And here the refresh history:

.

end.

That’s it! As mentioned earlier there are several different refresh types, some of them involve the whole semantic model while others just process tables and / or partitions. If you would like to find out more about how to create and manage custom partitions in Power BI, I recommend to have a look into this blog post. Also, check out the appendix below for an example on how to process only specific tables or partitions with Azure Function. This can be useful in many use cases, certainly if you’d like to reprocess a partition right after you have changed the partition’s boundaries.

.

appendix.

If you’d like to just refresh one specific table instead of the whole model, just substitute the marked line accordingly:

// start refresh

m.RequestRefresh(RefreshType.DataOnly);

m.SaveChanges();

log.LogInformation("Power BI dataset refresh triggered");

// start refresh

m.Tables["DimCustomer"].RequestRefresh(RefreshType.Full);

m.SaveChanges();

log.LogInformation("Power BI dataset refresh triggered");

.

In the example above, DimCustomer illustrates the respective table to refresh. You probably would want to parameterise this part as well, where the table to reload originates from the body as an input parameter.

// start refresh

m.Tables["FactInternetSales"].Partitions["PreviousPeriod"].RequestRefresh(RefreshType.Full);

m.SaveChanges();

log.LogInformation("Power BI dataset refresh triggered");

.

In the example above, FactInternetSales illustrates the respective table and PreviousPeriod the respective partition to be processed. Also here, parameterisation would be meaningful.