how to automatically sync data from your import mode semantic models to delta tables in onelake.

introduction.

This feature is a great one! I feel like it kinda went a little bit under the radar with all the other recent releases! Indeed, there is so much going on these days.

So in this post, I wanna show you how to automatically export data from your semantic models in import mode to Delta Tables in your OneLake. In all honesty, I actually thought I could walk you through a data pipeline or python script where you could trigger such an export (similarly to when we triggered a refresh or pre-warmed a semantic model). But all of this is already handled by Fabric. It’s actually only a one-time setup. So no pipeline, no dataflows, no Python or PowerShell script is needed. You just set it up once, and Fabric simply takes care of all the exporting and updating for you.

Ok, but why would you want to do this in the first place? Well, as soon as the Delta Tables are available in your OneLake, you can do all the beautiful things that you are used to with Fabric, like creating shortcuts to your Lakehouse and loading it into a Notebook. Cool right?

As a disclaimer, at point of writing this post, the feature was still in preview.

prerequisites.

1. A Fabric capacity and workspace

2. A Power BI semantic model in import mode. In our case here, the model and report is called random_number_report. It is a report that is based on an API exposing a different set of random numbers with every call. You can check out this blog post to learn more about it.

1. What’s the goal?

The goal is to setup an automatic export of the tables in your semantic model (import mode) to Delta tables in OneLake. Anytime the model is refreshed, the tables are updated in OneLake, too!

2. Download OneLake file explorer

You do not need the file explorer per se, but it’s a great tool to check out what is going on in your OneLake anyway. Just follow the steps described on Microsoft’s official website to get started. I actually wonder whether Microsoft is planning to provide such a “OneLake view” within the actual Fabric experience as well.

Anyway, when you are done and you have connected it properly, you can see why they call OneLake also the OneDrive for data, simply because it just looks like it:

In the case above, we can only see a Lakehouse and nothing else, although we do have a semantic model in import mode in our workspace, too:

This is because only the Lakehouse’s tables can be found in OneLake so far. But we are about to change this!

3. Enable OneLake integration for the semantic model

First, we need to enable the large semantic model storage format. I asked myself, why we would need that, and I think it has to do with this one here:

“While required for semantic models to grow beyond 10 GB, enabling the Large semantic model storage format setting has other benefits. If you’re planning to use XMLA endpoint-based tools for semantic model write operations, be sure to enable the setting, even for semantic models that you wouldn’t necessarily characterize as a large semantic model. When enabled, the large semantic model storage format can improve XMLA write operations performance.”

After that, we can toggle on the new setting OneLake Integration:

However, before the tables will appear in the explorer, we need to trigger one refresh:

In order to b able to see the data in our OneLake explorer, we need to right click the workspace folder in the file explorer and click Sync from OneLake:

Finally, the new folder has popped up in our OneLake view, too.

4. Create shortcut from your Lakehouse to OneLake.

Next, we create a shortcut from that folder to the existing Lakehouse by first clicking on the three dots and then New shortcut. After, just follow the subsequent windows, which are rather self-explanatory.

After successful completion, we should be able to see the table in our Lakehouse under Tables.

Now, we can even query the table in a notebook:

showtime.

Up to this point, we just set the whole thing up. Now, we wanna actually test and see how (well) this feature works.

Before we refresh the Power BI semantic model again in order to check out whether the new data is also reflected in our Lakehouse, let’s first look at our current report. The table visual below displays all the data there is in the model (a series of random numbers). And as we can see, this is the same data as when we queried the Delta Table in our notebook (see above):

Now, let’s refresh the report. The refresh calls the randomnumberapi returning a different set of random numbers. You might need to additionally click on the refresh button in the report view in the top right. Note, that button does not refresh the semantic model but instead updates just the visuals:

When querying the shortcut Lakehouse table, the changes shall also be reflected there – and it does. Pretty cool, huh?

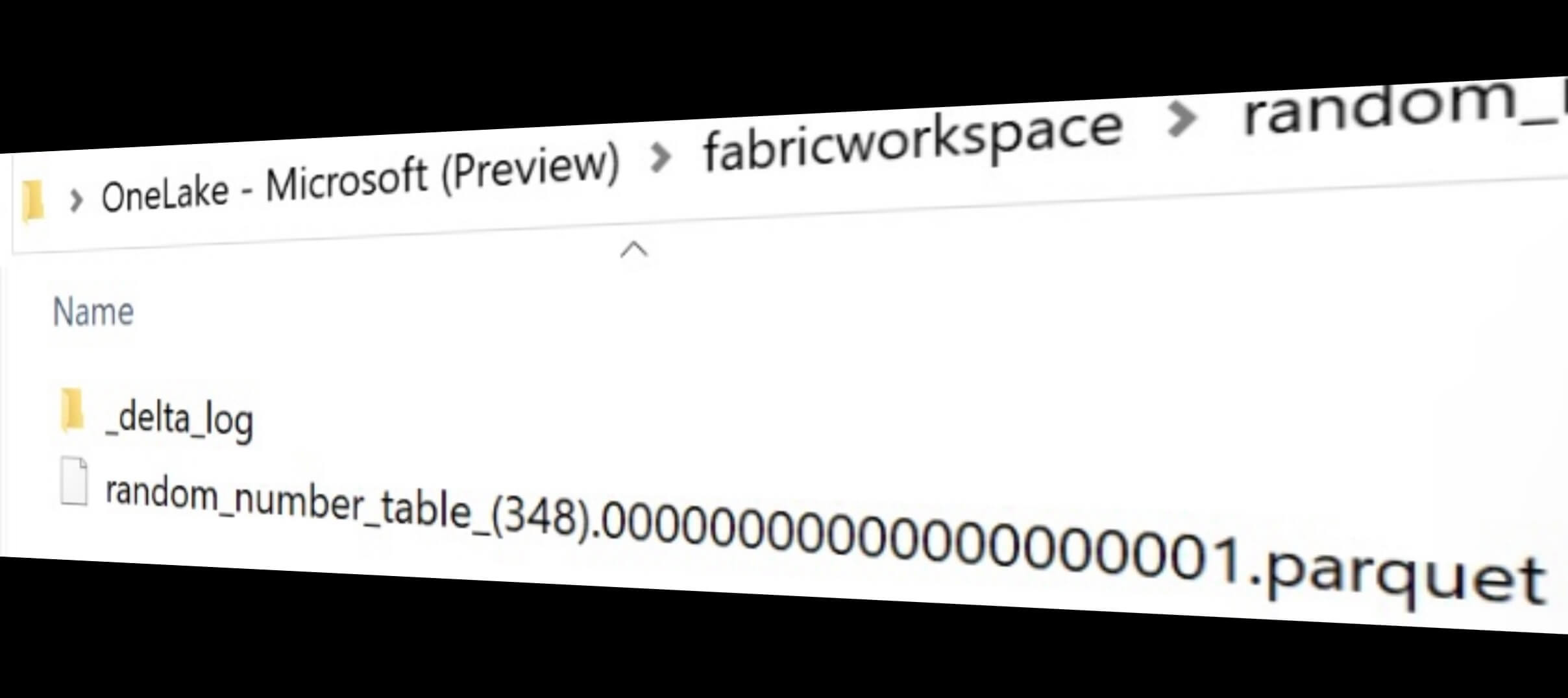

One last thing I noticed is that even after several refreshes, there is only one file apparent:

This shows that the well-appreciated time travelling feature is not possible (yet?). I tried different things to work around it and to actually enable version querying. Long story short, I did not succeed :D But I documented the steps in the appendix. Here the explicit explanation of this limitation on the official Microsoft documentation:

end.

Personally, I think this is a great way to get started with Fabric and the concept of Lakehouses. Also, this could be a natural starting point when migrating over to Fabric: Instead of rebuilding all the ETL and backend into Fabric workspaces, why not starting the migration on the other end by getting the semantic data from your import models straight into your Lakehouse. I’d personally would love to see the versioning / time travelling feature at least as a setting to opt in, but maybe that is coming at some point.

appendix.

As promised, here some queries I tried out in order to enable the time travel feature for Delta Tables. In fact, the different versions are tracked and can be displayed:

But when querying a version that is not the latest one, the error “org.apache.spark.SparkFileNotFoundException: Operation failed: “Not Found”, 404, HEAD” is thrown:

Here the Delta Table’s properties:

Unfortunately, it was forbidden to add, change or adjust the table properties of those Delta Tables: